Automotive lighting is evolving, says Yole

As lighting becomes an active driving feature, the market is expected to reach $38.8 billion by 2024

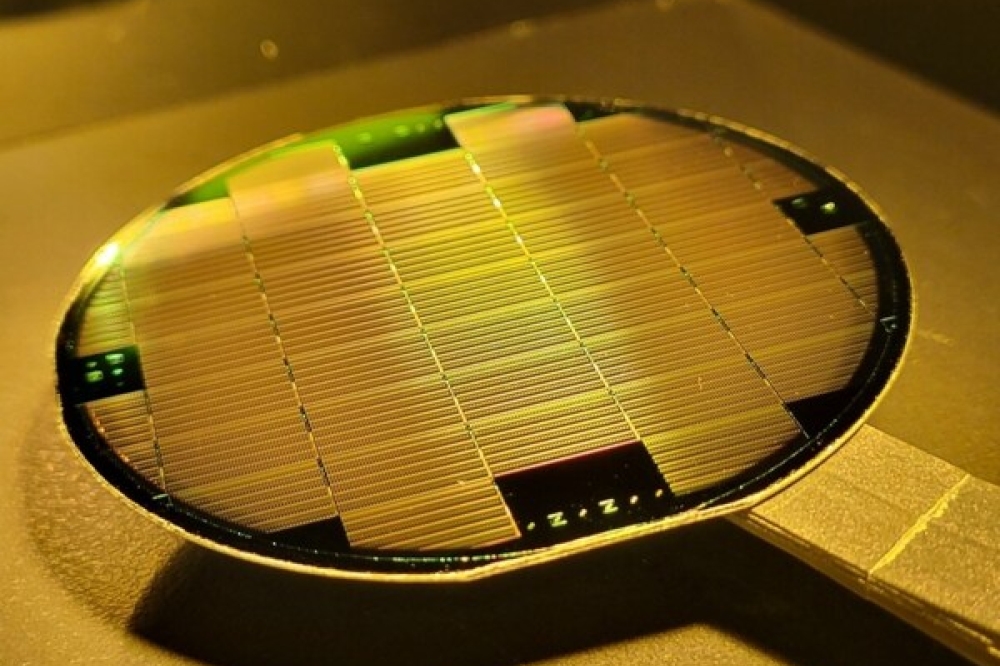

According to Yole's 'Automotive Advanced Front-Lighting Systems' report, automotive lighting is evolving from a basic, passive feature that helps the driver see the road in the dark into an active function able to detect oncoming traffic and reduce glare.

As a result, the market research company forecasts that the automotive lighting market will reach $38.8 billion by 2024, a compound annual growth rate (CAGR) of 4.9 percent between 2018 and 2024.
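To show how the two headline figures fit together, here is a minimal sketch that inverts the CAGR formula, final = base × (1 + CAGR)^years, to recover the market size implied for 2018. It assumes annual compounding over six periods (2018 to 2024). The function names are hypothetical, and the resulting 2018 value is a back-calculation from the quoted figures, not a number reported by Yole.

```python
# Back-calculate the implied 2018 market size from the quoted figures:
# $38.8 billion in 2024 and a 4.9 percent CAGR over 2018-2024.
# Assumption: the CAGR compounds annually over six periods (2018 -> 2024).


def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Invert final = base * (1 + cagr) ** years to recover the base value."""
    return final_value / (1.0 + cagr) ** years


def project(base_value: float, cagr: float, years: int) -> list[float]:
    """Year-by-year values from base_value, growing at a constant CAGR."""
    return [base_value * (1.0 + cagr) ** t for t in range(years + 1)]


if __name__ == "__main__":
    base_2018 = implied_base_value(38.8, 0.049, 2024 - 2018)
    print(f"Implied 2018 market: ${base_2018:.1f} billion")
    for year, value in zip(range(2018, 2025), project(base_2018, 0.049, 6)):
        print(f"{year}: ${value:.1f} billion")
```

Under these assumptions the implied 2018 market is roughly $29 billion, and projecting it forward at 4.9 percent per year returns the $38.8 billion forecast for 2024.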

The emergence of advanced driver-assistance systems (ADAS) was driven by the development and integration of sensors into cars, starting with basic functions and progressing to complex ones: an infrared LED for the rain sensor; radar for blind-spot monitoring, cruise control and adaptive cruise control; and a camera for traffic sign recognition or lane departure warning. “The integration of sensors in ADAS vehicles is mandatory,” states Yole’s team in the Automotive Advanced Front-Lighting Systems report.

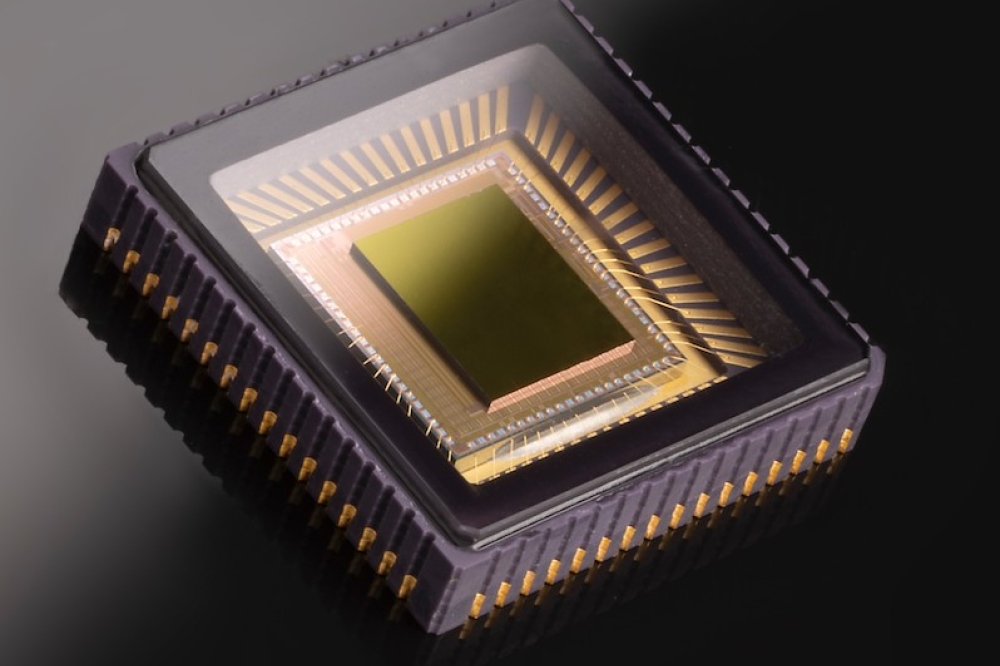

Furthermore, the development of advanced ADAS is linked to the development of data processing and of innovative sensors suitable for automotive use. Camera-based ADAS currently come in three levels of complexity. At the basic level, adaptive cruise control (ACC) uses the camera to monitor the distance between two vehicles. At the advanced level, traffic sign recognition requires the camera to read numbers. At the complex level, driver monitoring requires the camera to identify the driver’s eyes and detect drowsiness.

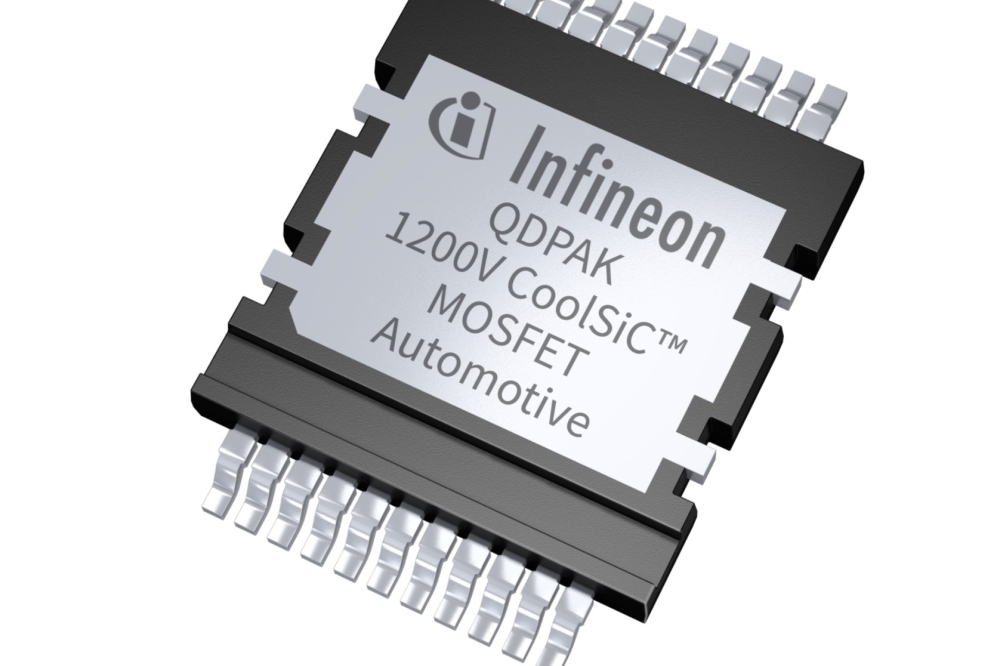

According to Pierrick Boulay, Technology & Market Analyst, Solid-State Lighting at Yole: “The development of advanced ADAS functions means an increasing penetration of electronics into the vehicle. It started with one module for one function, but now some devices are multi-functional, like the front camera(s), and such evolution is also occurring at the sensor level, such as the combination of camera and radar.”

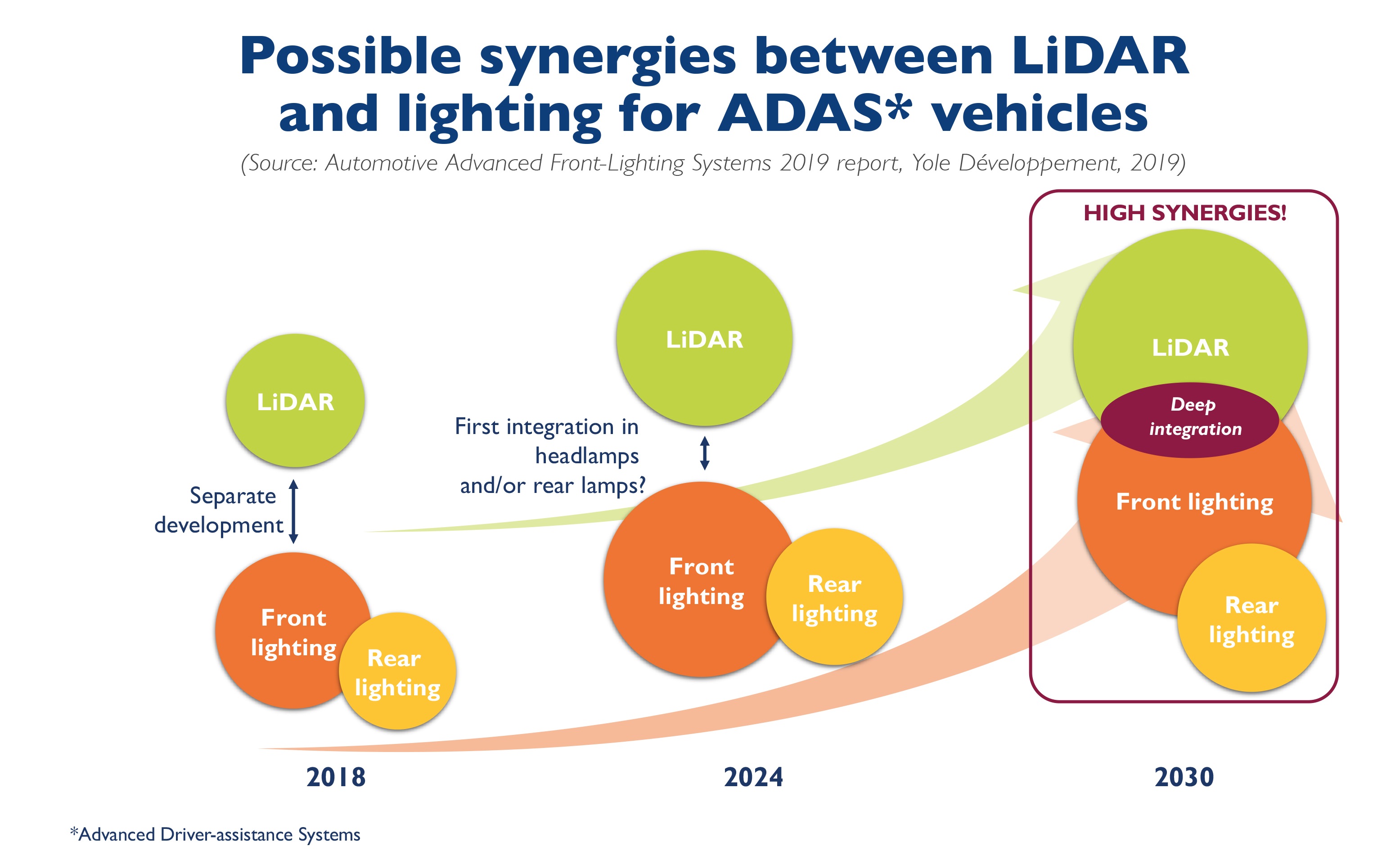

The increased integration of electronics also benefits exterior lighting applications: data collected by sensors can be processed to enable advanced lighting functions. A clear example is high-resolution lighting, where the camera is used to detect oncoming or preceding vehicles.

In this dynamic context of innovation, OEMs are playing a key role. Martin Vallo from Yole comments: “OEM requirements were quite different one or two years ago, but now tend to homogenise as they better understand the technology.”

For example, OEMs set requirements at several levels when integrating LiDAR. First, for optical integration, the loss in detection range must be limited to 10 percent, and a high level of transmission (90 percent) is required. The outer surface should also be as close as possible to normal to the LiDAR to limit beam deviation. For thermal integration, the LiDAR needs to operate in all conditions, so the sensor has to withstand temperatures above 95°C in specific situations, and management of internal humidity will be mandatory.
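One way to see how the 10 percent range-loss and 90 percent transmission figures relate is a simplified LiDAR link budget. This is a minimal sketch under assumptions that are not from the Yole report: the pulse crosses the outer cover twice (out and back), so received power scales with T² for a one-way transmission T; a target is detected when received power exceeds a fixed threshold; and received power falls as 1/R² for an extended target that fills the beam, or as 1/R⁴ for a small target. Solving T²/Rⁿ = constant gives a maximum range proportional to T^(2/n). The function names are hypothetical.

```python
# Relative LiDAR maximum range behind an outer cover, under a simplified link budget.
# Illustrative assumptions (not from the Yole report or any OEM specification):
#   - the pulse crosses the cover twice, so received power scales with T**2;
#   - detection occurs when received power exceeds a fixed threshold;
#   - received power falls as 1/R**2 for an extended target filling the beam
#     (range_exponent=2) or as 1/R**4 for a small target (range_exponent=4).


def relative_max_range(transmission: float, range_exponent: int = 2) -> float:
    """Max range with the cover divided by max range without it.

    Holding T**2 / R**n at the detection threshold gives R proportional to T**(2/n).
    """
    if not 0.0 < transmission <= 1.0:
        raise ValueError("transmission must be in (0, 1]")
    if range_exponent not in (2, 4):
        raise ValueError("range_exponent must be 2 (extended) or 4 (small target)")
    return transmission ** (2.0 / range_exponent)


def range_loss_percent(transmission: float, range_exponent: int = 2) -> float:
    """Percentage of detection range lost because of the cover."""
    return 100.0 * (1.0 - relative_max_range(transmission, range_exponent))


if __name__ == "__main__":
    for n, label in ((2, "extended target"), (4, "small target")):
        loss = range_loss_percent(0.90, n)
        print(f"T = 90%, {label}: range loss {loss:.1f}%")
```

Under the extended-target assumption, a 90 percent transmission gives exactly a 10 percent range loss, which is consistent with the pairing of the two figures in the text; for a small target the same cover would cost only about 5 percent of range.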

De-icing will also be a problem, so washing and heating systems for the outer lens must be evaluated. For shock and mechanical integration, a LiDAR is not easy to break, so in the event of a pedestrian impact, the effect on the lower leg must be taken into account. Depending on the LiDAR’s vertical field of view, an aiming system may be mandatory (to compensate for mechanical dispersion, load, acceleration and braking).

Finally, styling integration requires a compromise between performance, technical constraints and aesthetics. LiDAR can be integrated in two ways, visibly or invisibly, depending on whether the OEM wants to showcase its technology. Yole’s analysts provide a detailed analysis of these innovative technologies in the automotive lighting report…