Predicting the future: Looking ahead 25 years

Our industry will have expanded its reach by the time Compound Semiconductor celebrates its fiftieth anniversary. Come that milestone, chips made by our industry will be behind: widespread air, water and surface disinfection; superior displays; incredibly efficient power supplies; and breath-taking data rates.

BY RICHARD STEVENSON

I EXPECT THAT YOU, like me, are annoyed by the oft-repeated quip ‘gallium arsenide is the material of the future and always will be’. That view surely smacks of ignorance. Those who say such things are clearly unaware of the GaAs-based laser diodes in their CD and DVD players, the high-power variants used to cut and weld the metals that form the skeleton of their cars, and the GaAs HBTs that amplify the RF signal within their smartphones.

However, while some of those working within the silicon industry may irk us all – whether we are part of the GaAs industry, or a sector involved in the manufacture of InP, GaN or SiC chips – we have to admit that we can learn a lot from them. The reality is that those of us who work in compound semiconductor fabs dream of replicating the maturity of their technology, their understanding of reliability, and their low levels of defects.

Another lesson we can learn from the silicon industry is that if we plot the rate of success to date and extrapolate this, we see what the future holds. It’s a point well-illustrated by Moore’s Law, a rate of progress that has been maintained for decades. Even when continual success seems unlikely, with further miniaturisation throwing up issues with no obvious solutions, progress has continued at historical rates.

We can also plot progress in our industry. This gives us the opportunity to not only look back at how far we have come, but see where we might be in the future. Using this approach we can take an educated guess at not only where the performance of compound semiconductor chips could be in the future, but also the advances in the technology they support.

In the LED industry, progress follows Haitz’s Law, which states that with the passing of every decade, the cost-per-lumen plummets by 90 percent and the output of a packaged LED climbs by a factor of 20. It is this rapid rate of progress in the bang-per-buck that has enabled white LEDs to move on from their first killer application, providing illumination in handsets, to backlighting screens and eventually providing general lighting. Over the next few years, further falls in the price-per-lumen will help LED lighting become the incumbent technology everywhere. Revenue for this sector is tipped to climb, but the LED light bulb is arguably now a commodity, offering razor-thin margins to chipmakers.
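Haitz’s Law as stated above lends itself to a back-of-the-envelope extrapolation. The short Python sketch below is an illustration rather than a forecast: it assumes the two per-decade factors compound smoothly between decades, and it works with values normalised to 1.0 today. The function name `haitz_projection` and its parameters are hypothetical, introduced only for this example.

```python
def haitz_projection(years, cost_per_lumen=1.0, flux_per_package=1.0):
    """Scale cost-per-lumen and per-package output over a number of years.

    Assumes the per-decade factors quoted for Haitz's Law (cost falls by
    90 percent, output rises 20-fold) compound smoothly within a decade.
    The default starting values of 1.0 are normalised placeholders, not data.
    """
    decades = years / 10.0
    cost = cost_per_lumen * 0.1 ** decades      # 90 percent fall per decade
    flux = flux_per_package * 20.0 ** decades   # 20-fold rise per decade
    return cost, flux


for years in (10, 20, 25):
    cost, flux = haitz_projection(years)
    print(f"{years} years: cost x{cost:.4g}, output x{flux:.4g}")
```

Under these assumptions, 25 years of progress corresponds to a cost-per-lumen roughly 300 times lower, and a per-package output nearly 1,800 times higher, than the starting point.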

An emerging opportunity for visible LEDs is in displays made from microLEDs. This application, which could net billions and billions of dollars, will be helped by a fall in the cost-per-lumen. However, if this type of display is to become commonplace, a breakthrough is needed in the efficient mass transfer of the tiny chips that create the pixels. It’s a problem that has been grappled with for several years, and there are some promising solutions, such as eLux’s microfluidic technology – but more progress must follow if this is to become the next killer application.

LED on Mars

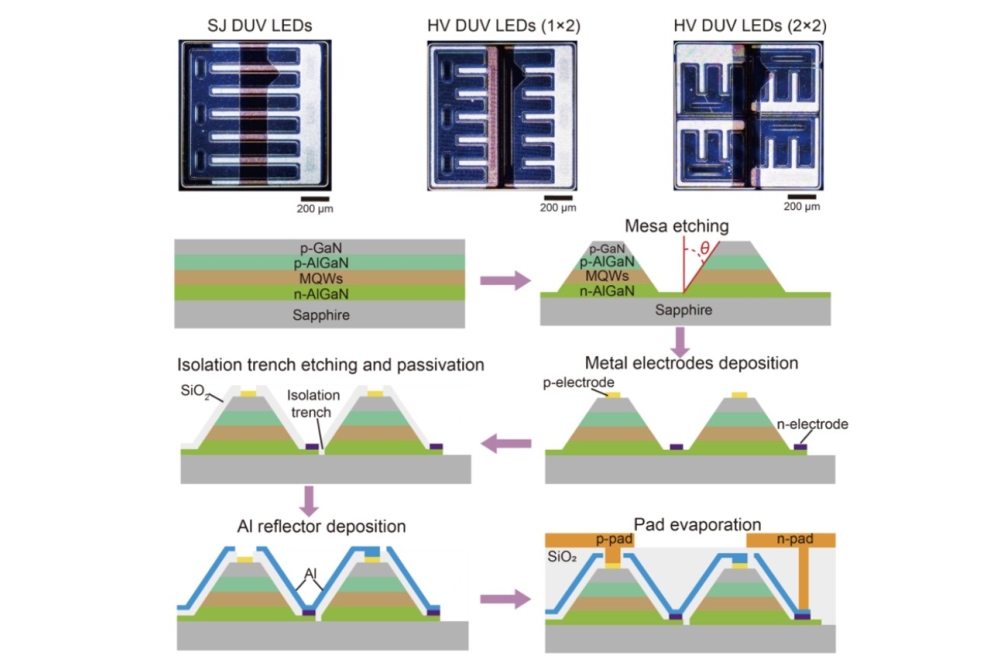

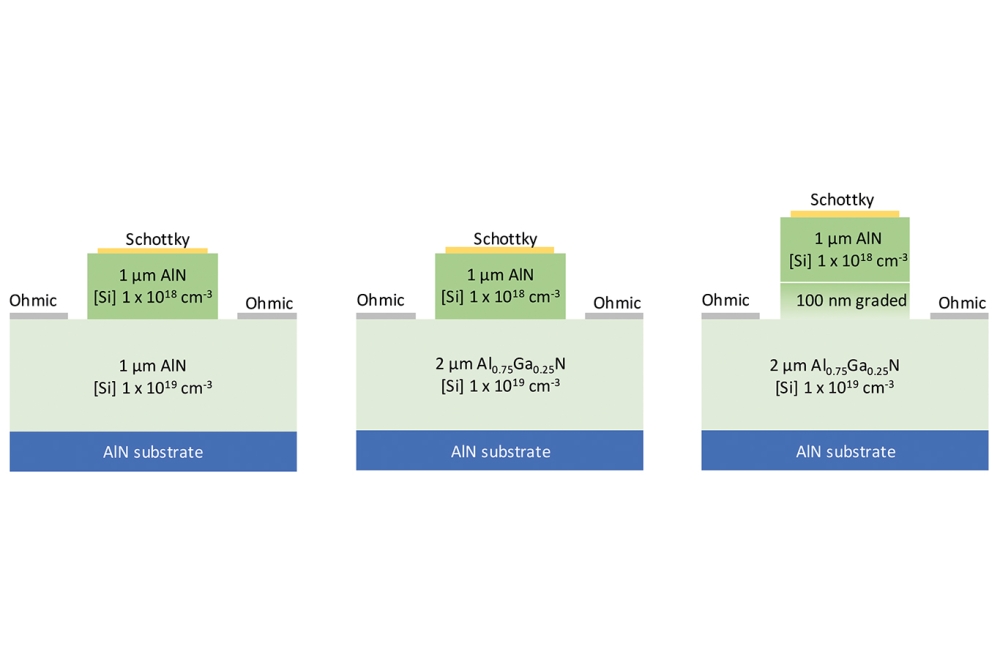

For deep-UV LEDs, if progress continues to follow Haitz’s Law, these devices will be everywhere by the time Compound Semiconductor celebrates its 50th anniversary. By 2045, packaged deep-UV LEDs could deliver several hundred watts of power, at a cost of just a dollar-per-watt, thanks to improvements in the chip’s design, its package and the way it is driven.

If that happens, today’s incumbent source of deep-UV emission, the mercury lamp, will be relegated to museums. By then, the UV LED will be the dominant technology for purifying water and disinfecting surfaces and air, and it will also be deployed in numerous processes involving the curing of adhesives. Some devices may even be on Mars – NASA has manned missions slated for the 2030s, and even if there are delays, there is a good chance that within the next 25 years deep-UV LEDs will be purifying an astronaut’s drinking water on the red planet.

The coming decades should also witness a steady increase in the efficiency of blue lasers. While blue LEDs can operate with wall-plug efficiencies exceeding 70 percent, today’s best single-mode blue lasers are only operating in the high thirties, and their multi-mode variants in the mid-forties. Both figures are on the rise, and even if the rate of improvement is not maintained in the coming decades, efficiencies could still exceed 70 percent by 2045.

What would be the implications of such efficient emitters, which have the added benefit of easing thermal management? There is sure to be a rise in the use of these sources for processing copper and other yellow metals, because these materials have an absorption sweet-spot in the blue. In comparison, when today’s more common infrared lasers are used to process yellow metals, this leads to sputtering and ultimately inferior welds.

In addition to a hike in sales for material processing, driven by the growth in electric-vehicle production, blue lasers are tipped to see increasing use in colour projectors. Insufficient brightness has held back sales, but the opportunity to dispense with a backlit screen is a major asset in both the home and the office. Efficient green lasers are also needed. While they lag those in the blue, they are also improving, and they don’t need to be quite as efficient, because they operate at around the eye’s most sensitive wavelengths.

Given the merits of on-wafer testing, a circular emission profile and a very high modulation rate, the next 25 years should witness growth in shipments and spectral coverage of the VCSEL. Over the next few years, infrared variants will gain greater deployment in smartphones, where they support facial recognition, and further ahead in vehicles, providing a light source for LiDAR. GaN-based blue and green VCSELs are still in development, but there is no doubt that they will move into production, probably initially as sources for car headlights. By 2045, they should have entered other markets, possibly including displays.

Multi-junction missions

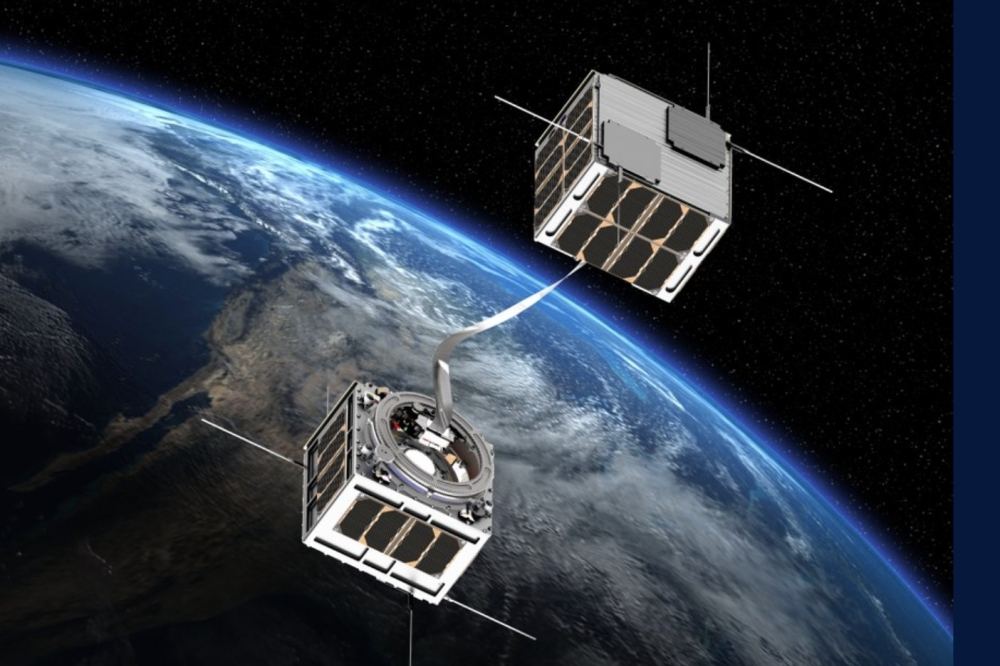

In the next few years many satellites are going to be launched. Contributing to this hike is the SpaceX Starlink programme, aiming to create a network of 12,000 small satellites by 2027. The satellites will form the backbone of global broadband internet.

Such efforts will provide much business for makers of multi-junction solar cells. Although these devices are pricey, the higher efficiency and great robustness to radiation allow them to monopolise this market. The efficiency of these cells is increasing, but gains are not easy to come by. One option currently being explored is to concentrate sunlight, an approach that can deliver a substantial gain.

Efforts at concentrating sunlight previously offered much promise for the creation of a terrestrial industry, before hopes were dashed by a double whammy: plummeting silicon prices, and a financial crisis that starved fledgling firms of much-needed investment. Far fewer teams are now battling for the bragging rights that come from having the most efficient cell – a potential solution to cutting costs – but records continue to rise. Leading the way is a team from NREL, pursuing a six-junction design. This device has an efficiency of just over 47 percent, and if its resistance can be reduced to that found in four-junction variants, this architecture could break the 50 percent barrier.

Further gains are possible, with values of just over 60 percent a realistic upper limit. Given the slow progress with silicon cells, that would be more than double the record value for the incumbent technology. But even an advantage that significant is not necessarily going to lead to a re-birth of the terrestrial CPV industry, which is in a catch-22 situation. For costs to come down there need to be economies of scale, alongside refinements coming from insights associated with significant production volumes. Could that come from CPV in space? If it does, and the power generated per unit area comes to the fore as a key metric in sunny climes, CPV stands a chance of being a big industry by 2045.

SiC, GaN and gallium oxide

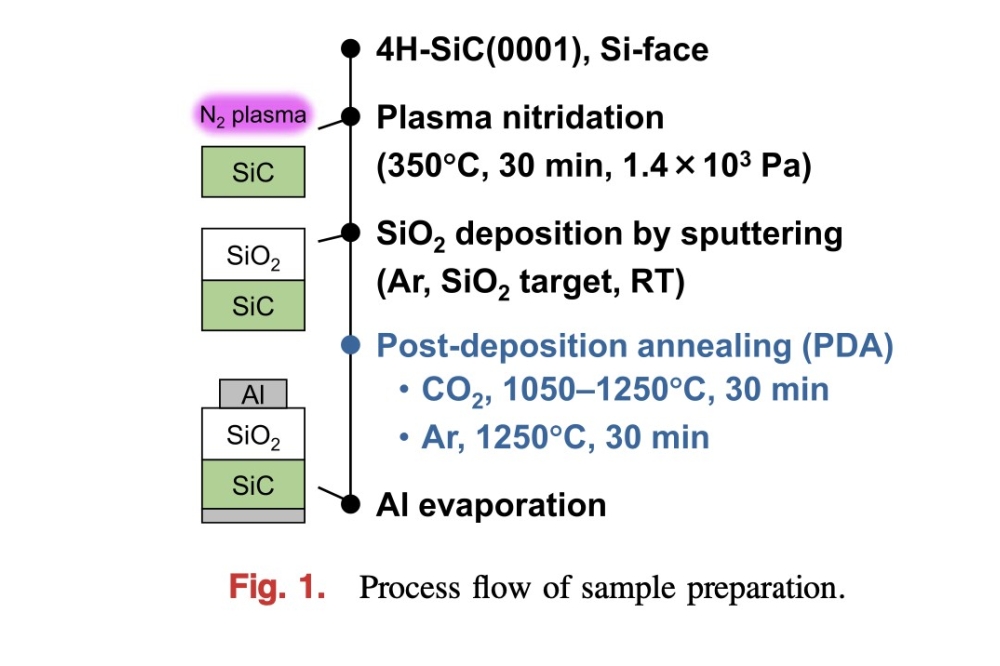

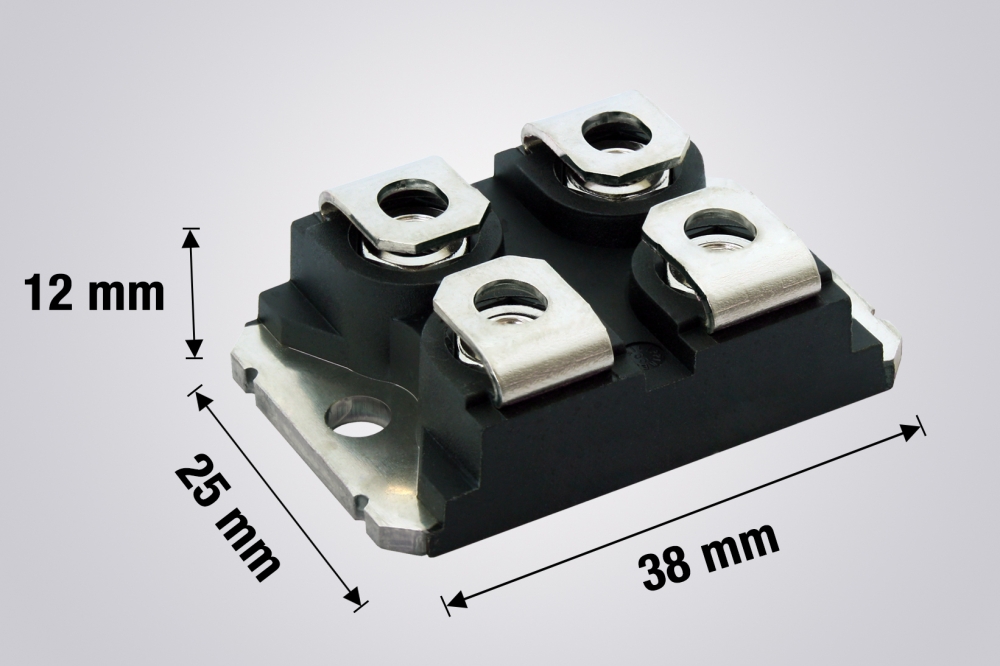

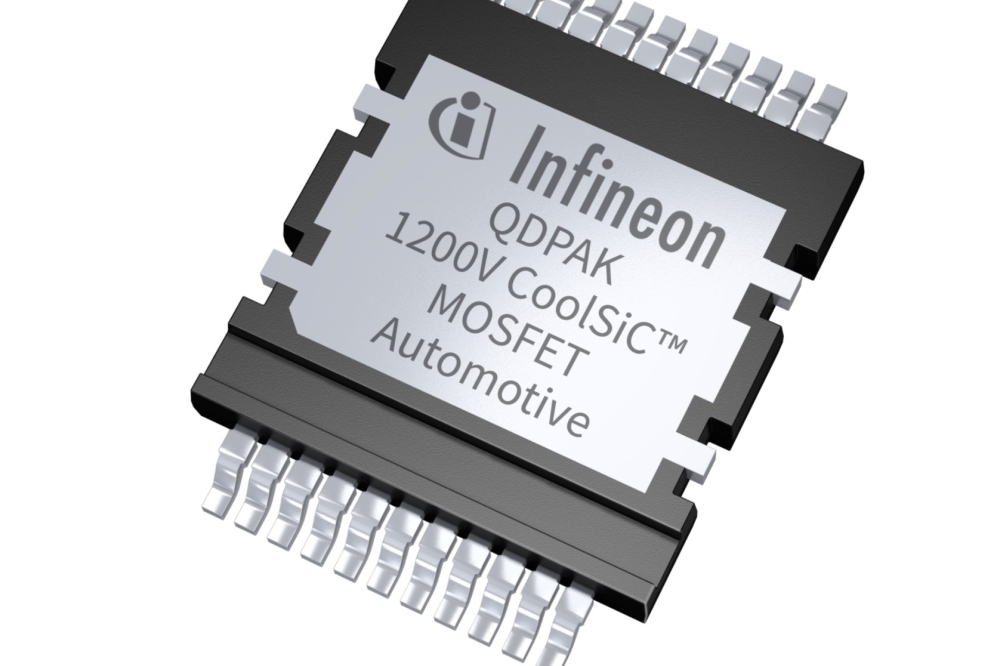

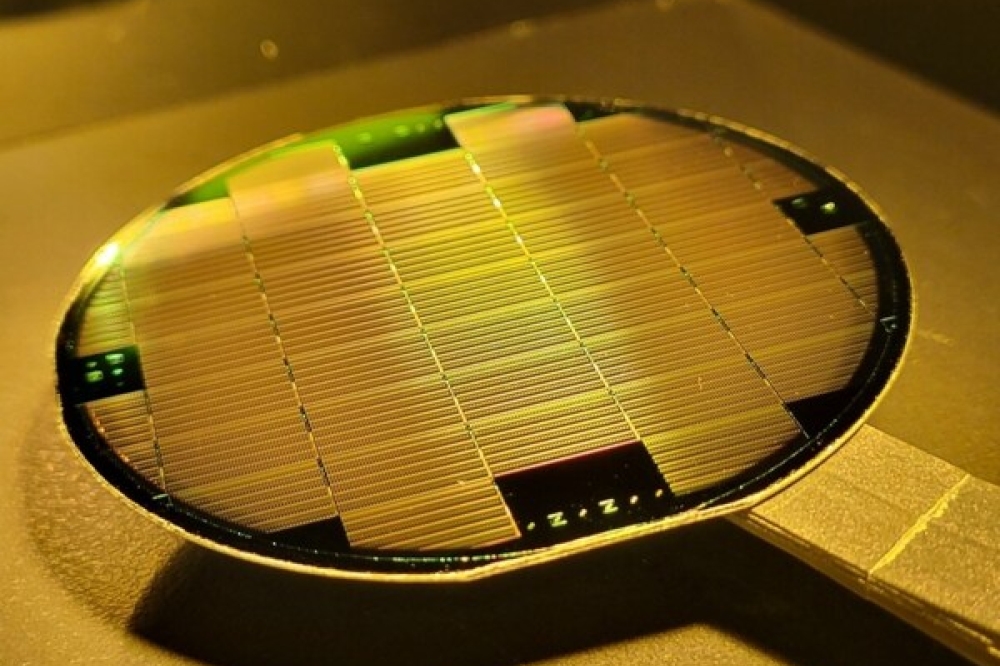

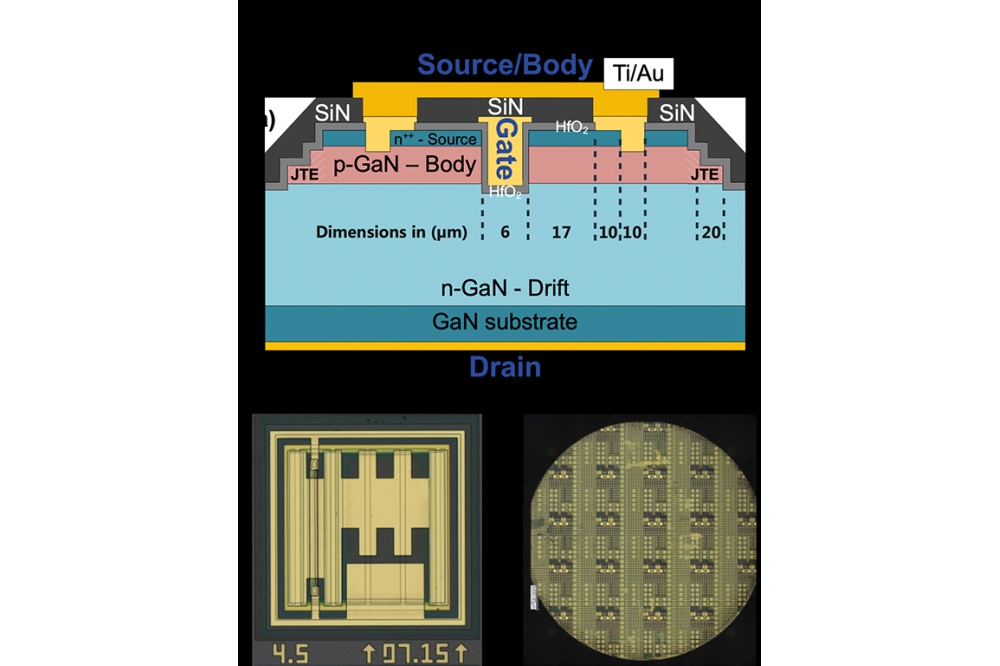

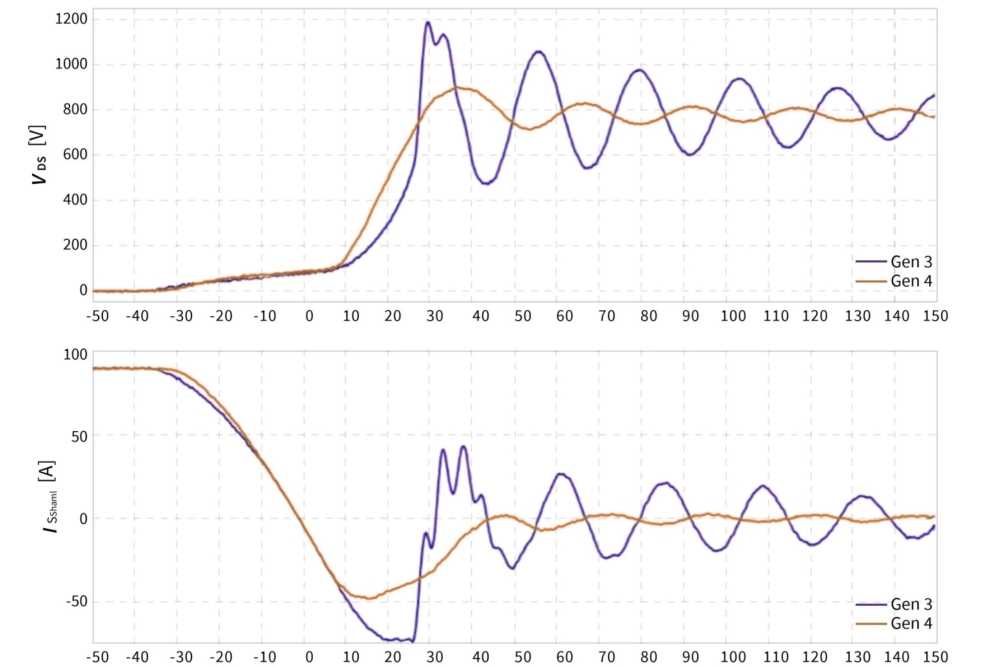

In the wide bandgap industry, progress is fast, spurred on by Cree’s $1 billion investment in SiC that will increase its capacity by a factor of 30 between 2017 and 2024. Many of its SiC devices will be deployed in electric vehicles, which will become more common as governments all around the world look to trim their carbon footprints. Shipments of GaN-on-silicon power devices are also tipped to take off. Operating at lower voltages – initially 600 V and below – they will enable an increase in the efficiency of numerous forms of power supply. Further ahead, GaN CMOS should start to appear, leading to further gains in circuit efficiency, realised with smaller, lighter-weight units.
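For a sense of scale, the 30-fold capacity increase quoted above can be converted into an equivalent yearly rate. The sketch below assumes smooth compound growth across the seven years, which a real fab expansion would not follow, and the helper name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(factor, years):
    """Return the constant yearly growth rate that gives `factor` after `years`.

    Assumes smooth compound growth, which is a simplification: real capacity
    arrives in steps as new lines come online.
    """
    return factor ** (1.0 / years) - 1.0


# 30-fold increase between 2017 and 2024, as quoted in the text
rate = implied_annual_growth(30.0, 2024 - 2017)
print(f"Equivalent compound growth: {rate:.1%} per year")
```

On that assumption, the expansion is equivalent to capacity growing by a little over 60 percent every year.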

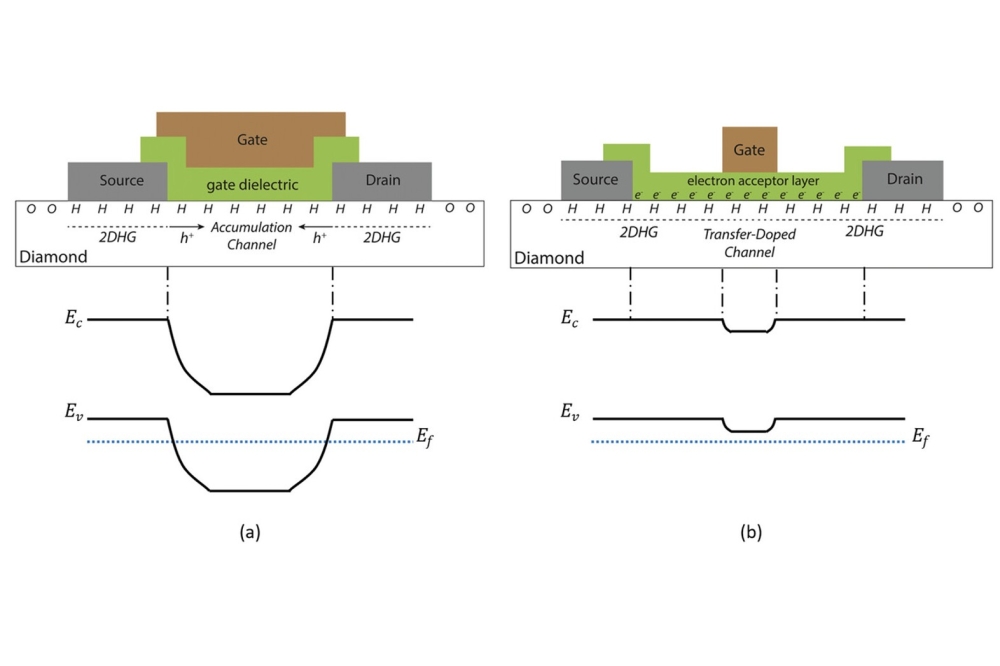

However, by 2045, these middleweights – and that is how we should look at GaN and SiC – could well be in decline. By then, they could be overtaken by gallium oxide, a heavyweight with many attractive attributes. Gallium oxide’s potential performance, judged in terms of Baliga’s figure-of-merit, is better by an order of magnitude, and the cost of manufacture will be competitive. Unlike SiC and GaN, native substrates can be grown by melt-based techniques, allowing production of competitively-priced devices with great crystal quality. As with GaN, transistor performance is impeded by difficulties in realising p-type doping, but engineers can turn to a similar trick to ensure success: use the work function of the gate to pinch off an array of fin channels. Another issue with gallium oxide is its low thermal conductivity, but again, the solutions adopted with other compound semiconductor devices offer routes to success. By following in the footsteps of other parts of the wide bandgap industry, it is possible that gallium oxide devices will take less time to get to market, and undergo a faster ramp to production volumes.

The shape of 7G

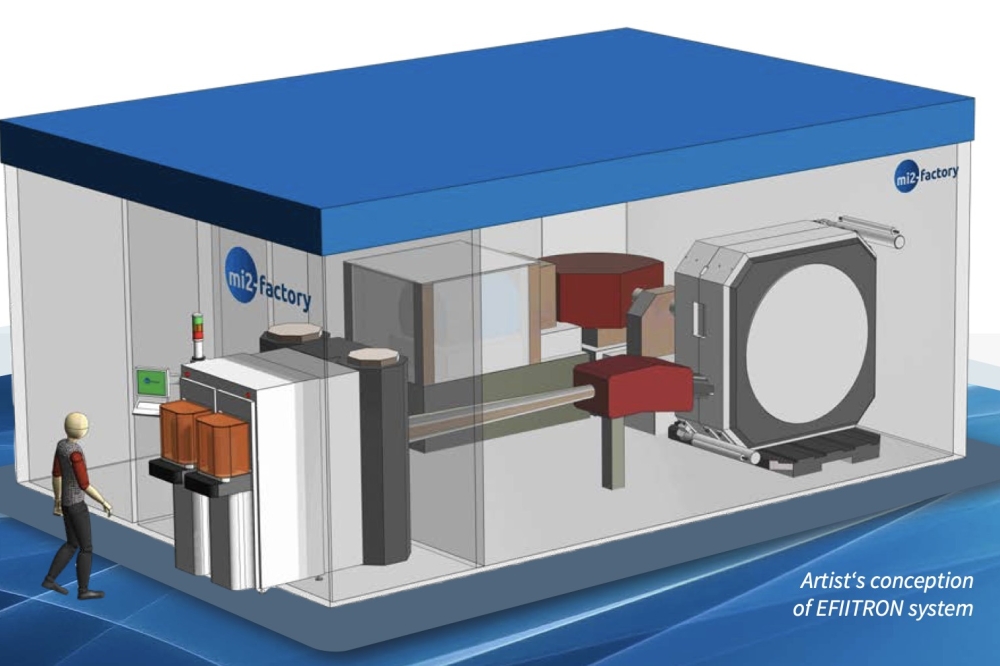

Based on historical rates of progress, which indicate that it takes about a decade to migrate from one generation of mobile technology to another, we should have 7G well established by 2045. If this happens, one can envisage incredibly fast data rates, possibly at hundreds of gigabits per second, using transmission in the terahertz domain.

But is it inevitable that we shall have this technology? Some well-informed sceptics suggest not, offering a range of compelling arguments based on market economics. Many of us are upgrading our smartphones far less frequently than we used to, because the latest models offer only incremental benefits – maybe a better camera, or water-resistance. Often we are reluctant to pay high fees for call plans, and so it’s hard for carriers to grow their revenues. The problem is essentially that as time goes on, we expect more while paying less. With that mindset at play, carriers should be reluctant to invest in any new generation of technology. And this concern should be magnified, because the roll-out of successive generations of technology is now getting more expensive. New generations of mobile technology operate at higher frequencies, where attenuation is higher. As increasing the transmission power is not a viable option – regulators are unlikely to approve, and a hike in power would be detrimental to battery life – the solution is to go to smaller cells, and this increases roll-out costs.
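The link between higher frequencies and smaller cells can be illustrated with the textbook free-space path-loss formula, loss = 20·log10(4πdf/c). The Python sketch below is a simplification under stated assumptions: it ignores antenna gain, atmospheric absorption and obstructions, and the carrier frequencies (3.5 GHz, 28 GHz and 300 GHz) and the 1 km reference cell radius are illustrative choices rather than figures from any operator or standard. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB between isotropic antennas."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def range_for_loss(loss_db, freq_hz):
    """Distance in metres at which free-space loss reaches `loss_db`."""
    return C * 10.0 ** (loss_db / 20.0) / (4.0 * math.pi * freq_hz)


# Hypothetical reference: a 1 km cell radius at 3.5 GHz sets the loss budget
REFERENCE_RADIUS_M = 1000.0
budget_db = fspl_db(REFERENCE_RADIUS_M, 3.5e9)

for freq_hz in (3.5e9, 28e9, 300e9):  # illustrative carrier frequencies
    radius_m = range_for_loss(budget_db, freq_hz)
    # Cells needed to cover the same area, relative to the reference case
    relative_cells = (REFERENCE_RADIUS_M / radius_m) ** 2
    print(f"{freq_hz / 1e9:6.1f} GHz: radius {radius_m:7.1f} m, "
          f"relative cell count {relative_cells:,.0f}")
```

In this idealised model the reach falls in proportion to frequency, so the number of cells needed to cover a given area grows with the square of the frequency. Real deployments also contend with atmospheric absorption and blockage, and can claw back some range with antenna gain.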

One may also wonder why anyone would need these breath-taking transmission speeds. It makes no sense to watch a broadcast in ultra-high-definition on a portable device with a small screen. Maybe ultra-fast data rates would be used for remote robotic surgery, but that is hardly a mainstream application. So, if most of us can’t see the need for super-high speeds, why would we ever be willing to pay extra for them? The threat is that carriers will never recoup their investment.

But maybe we are not looking at this in the right way. Back in the 1990s, when the much-lamented Word 5 provided the jewel in the Microsoft suite, there didn’t seem to be any need for more computational power, either in speed or memory. But if we were to try to use those machines today, they would feel very clunky and fail to cope with our high-res photos, digital downloads and so on. The extra levels of computational performance have been used in ways that we value today, but had not been envisaged.

Right now, the Covid-19 pandemic has shifted the world of work. While we might now get into the office, if we attend a conference it probably involves logging on and listening to presentations. It’s a poor second to being there, as we can’t interact with the same level of intimacy as before. For example, we can’t pick up on body language. However, suppose we had ultra-fast communication, offering a more immersive experience involving some form of virtual reality. This could be so beneficial that companies would be willing to pick up the tab for the connection, reasoning that it offered a good return for an outlay far less than plane tickets and hotel bills. It would also help to trim carbon footprints, an issue that may be taken more seriously in the 2040s.

If 7G does roll out before this magazine celebrates its fiftieth anniversary, it will surely be good news for our industry. Whether communication occurs high in the gigahertz realm, where there is plenty of space, or in the terahertz domain, compound semiconductor devices are sure to feature in handsets and infrastructure. GaAs may still dominate, but it could lose out to InP, the king of speed within the III-Vs.

Successes in this sector, plus those in power electronics, satellites, displays and disinfection, suggest that even those in the silicon industry who currently fail to appreciate the benefits of compound semiconductors should have started to change their minds well before 2045. Compound semiconductor devices are already pervading society, and in 25 years’ time the role they play will be even more significant.