Improving reliability... the journey so far

BY BILL ROESCH FROM QORVO

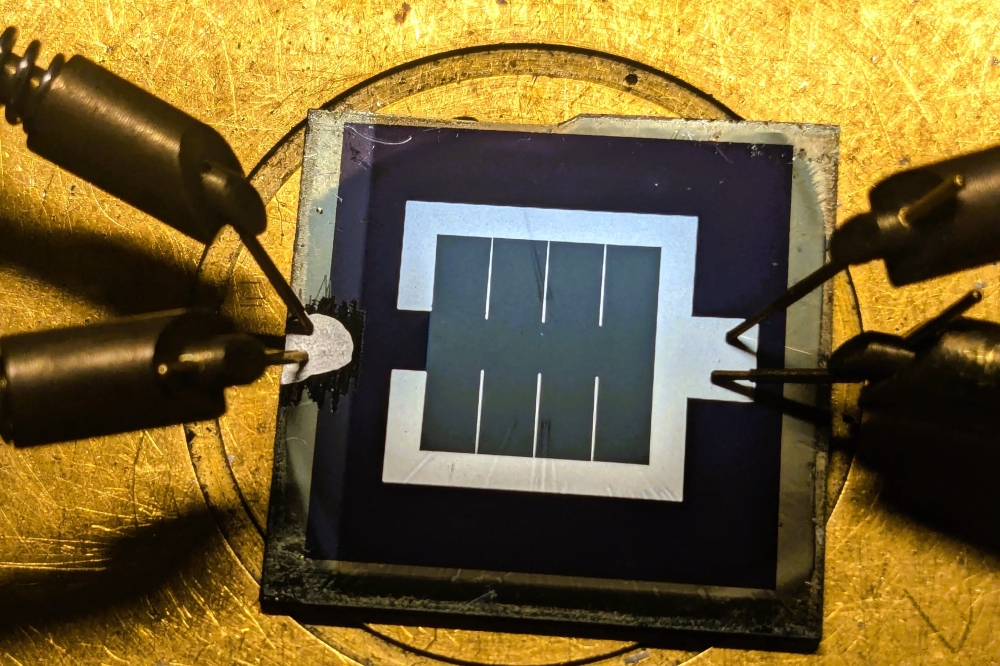

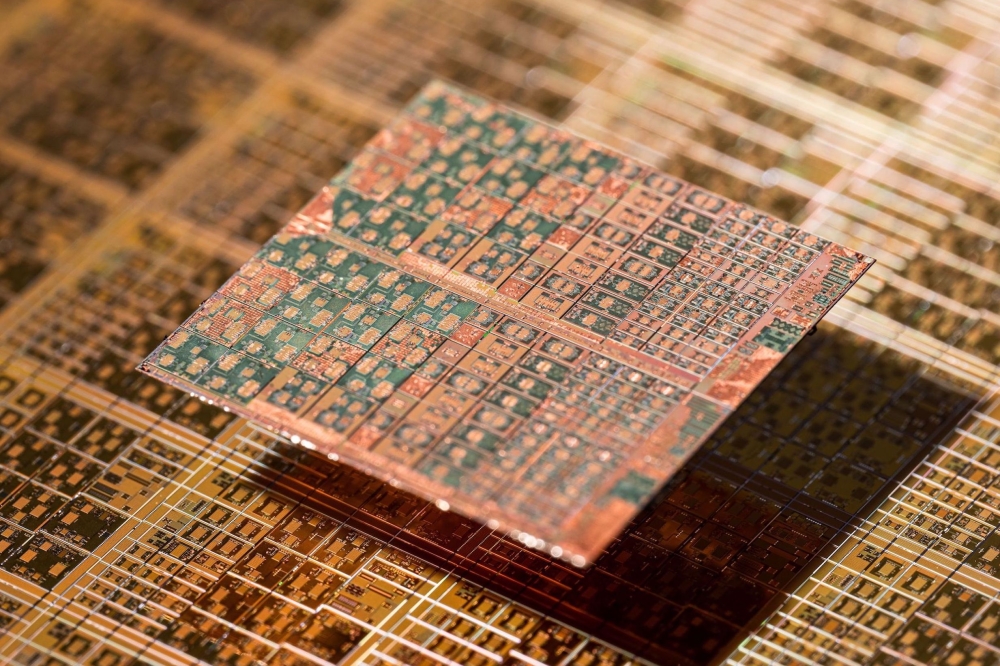

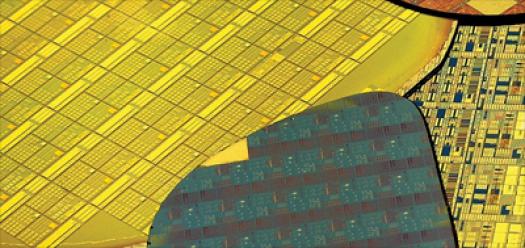

In semiconductor fabs everywhere, there are engineers who are amassing and tormenting the ghostly skeletons of cell phones. The handsets that they interrogate have no cases, no screens, no processors, no memories, no batteries: they are just a vacant shell of the phone's radio section, often containing only a single piece of the circuitry that so wonderfully connects the phone to the rest of the world.

It is in these laboratories of torture that engineers evaluate nearly every type of component that makes a phone mobile, from switches to filters, power amplifiers and everything in between. One dark truth of semiconductors, exposed and interrogated in these stress facilities, is that eventually even the very best products wear out. How long an integrated circuit can survive, however, is not the engineer's sole concern: recently, attention has also turned to the very small fraction of devices that could fail unexpectedly early.

For the compound semiconductor chips that lie at the heart of many mobile phones, amplifying signals that are transmitted to the local base station, the reliability journey has taken the same path as that for silicon, albeit with a delayed start.

One of the biggest challenges for any engineer trying to fully comprehend the reliability of III-V products is that circuit degradation is deceptively difficult to understand. That's because it depends not just on how customers use a product, but also on the intrinsic properties of each part: what it is made of and how those materials respond to wildly varying conditions. In addition to the materials, the package, the method of fabrication, the design and the layout of the circuit can all influence reliability. Complicating matters further, it is getting tougher to estimate a product's infant fallout.

These difficulties arise against the backdrop of a Moore's-law-driven age, in which electronic components are expected to get ever smaller, lighter, cheaper, more powerful and more efficient. To deliver this, engineers study new materials and design techniques. All of this is great for innovation, but it can be terrible for reliability.

Moore versus Johnson

An interesting, insightful perspective on the differences between silicon and the III-Vs comes from considering two papers from 1965. One of these is very famous: the article by Gordon Moore in Electronics, in which he speculated about cramming more components onto an integrated circuit and described the now-famous 'law' that began the microminiaturization age. Lesser known is the paper Physical Limitations on Frequency and Power Parameters of Transistors, published by Edward Johnson in his company's journal, RCA Review, in which he discussed a figure of merit. Johnson argued that "an ultimate limit exists in the trade-off between the volt-ampere, amplification, and frequency capabilities of a transistor". Like Moore, he had a theory that aimed to predict trends in future semiconductor development.

Although there is not a single mention of reliability in Johnson's publication, there is no doubt that he predicted that silicon devices would deliver superior performance to those made from germanium. One of the ingredients in the figure of merit is the critical electric field for breakdown, which rises with bandgap; this accounts for claims that GaAs performs better than silicon, but is outclassed by GaN.
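For reference, Johnson's figure of merit is usually written as a limit on the product of a transistor's maximum voltage $V_m$ and its transit-time cutoff frequency $f_T$, set only by material constants:

$$
V_m f_T = \frac{E_m v_s}{2\pi}
$$

Here $E_m$ is the critical (breakdown) electric field and $v_s$ the saturated carrier drift velocity. Because the breakdown field rises steeply with bandgap, wide-bandgap materials such as GaN score highly on this measure.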

Meanwhile, reliability was prominent in Moore's paper. He may have been fixated on cost, but he did discuss material performance, writing: "Silicon is likely to remain the basic material, although others will be of use in specific applications. For example, gallium arsenide will be important in integrated microwave functions."

Despite the difference in impact between Moore's Law and Johnson's Figure of Merit, both are still cited 50 years later, with the choice of citation depending on a preference for either microminiaturization or performance. However, integration and performance cannot stand alone, because if reliability is missing, the game is over.

Compound semiconductors are not new. Researchers had been studying and experimenting with the likes of GaAs, InP and GaN since well before Moore and Johnson made their claims in 1965. However, the ensuing decade brought a focus on reliability, initially for silicon.

At that time, the Japanese had pressed quality to the forefront, setting the bar for automobiles and electronics. Shrinking eventually pushes reliability to the limits of the materials, so to keep pace with Moore and the Japanese, semiconductor materials had to change. Compound semiconductor folks, on the other hand, were fixated on performance, with material changes underpinning gains in performance. The divergence between the technologies was set.

Semiconductor companies proliferated between 1975 and 1985. Intrigued by Johnson's performance promises, governments funded GaAs development programmes, helping to create more than a hundred labs throughout the world in the 1980s.

For those working with silicon, the focus clearly included quality, and at this time GaAs was at least a decade behind: it was still proving its viability, while trying to carve out a business niche that could unlock the door to mirroring the growth and profitability of silicon. Fast-forward to the decade from 1985 to 1995, and the reliability of compounds attracted attention, with efforts measuring lifetimes and showing that, compared with silicon, GaAs was good enough. By then, the silicon industry had pushed through its reliability difficulties and identified the path that GaAs must follow.

For those engineers measuring semiconductor wearout, life was generally straightforward: they would simply stress the device until it failed. They faced three challenges: determining the failure mechanism and the degradation that defines a failure; measuring the distribution of time to failure; and understanding which stress is applicable, so that the failure mechanism can be evoked faster than in normal use.
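Measuring the distribution of time to failure is often done by assuming a lognormal distribution, a common choice for semiconductor wearout. The sketch below is illustrative only: it assumes complete (uncensored) failure times, uses hypothetical hour values, and is not the method of any study cited in this article. It estimates the median life and the lognormal shape parameter sigma from a handful of samples.

```python
import math
import statistics


def fit_lognormal(times_to_failure):
    """Estimate median life and lognormal sigma from complete failure times.

    Assumes every sample was stressed until it failed (no censoring) and that
    ln(time to failure) is normally distributed.
    """
    if len(times_to_failure) < 2:
        raise ValueError("need at least two failure times to estimate spread")
    logs = [math.log(t) for t in times_to_failure]
    mu = statistics.mean(logs)       # mean of ln(t)
    sigma = statistics.stdev(logs)   # sample standard deviation of ln(t)
    return math.exp(mu), sigma       # median life = exp(mu)


# Hypothetical failure times (hours) from five stressed samples
failure_hours = [1200.0, 1500.0, 1800.0, 2100.0, 2600.0]
median_life, sigma = fit_lognormal(failure_hours)
print(f"median life ~ {median_life:.0f} h, sigma ~ {sigma:.2f}")
```

A single sample yields only a life estimate; the spread (sigma) needs several. Real lifetests usually end before every part fails, so production analyses typically use censored-data methods such as maximum-likelihood fitting instead of this simple estimator.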

The work of these engineers could begin by obtaining an estimate of life, which is relatively easy, as all it requires is to stress a single sample until it degrades. Estimating the variation of the lifetimes is not much harder, for it only requires replacing one sample with several. But determining a way to make a device fail faster is more challenging. Success on this front came in 1992, when Bob Yeats, working at Agilent Technologies, showed how to estimate an acceleration factor from a single GaAs MESFET.
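The article does not spell out Yeats's single-device technique, so it is not reproduced here. As a hedged illustration of what an acceleration factor is, the sketch below uses the conventional Arrhenius model often applied to temperature-accelerated lifetests. The activation energy and temperatures are hypothetical values chosen for illustration, and real devices may have mechanisms that do not follow Arrhenius behaviour.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant, eV/K


def to_kelvin(celsius):
    return celsius + 273.15


def acceleration_factor(ea_ev, use_c, stress_c):
    """Arrhenius acceleration factor: how much faster a mechanism with
    activation energy ea_ev proceeds at stress_c than at use_c (deg C)."""
    inv_use = 1.0 / to_kelvin(use_c)
    inv_stress = 1.0 / to_kelvin(stress_c)
    return math.exp((ea_ev / BOLTZMANN_EV) * (inv_use - inv_stress))


def activation_energy(life_1, temp_1_c, life_2, temp_2_c):
    """Infer Ea (eV) from median lives measured at two stress temperatures,
    assuming life is proportional to exp(Ea / kT)."""
    inv_1 = 1.0 / to_kelvin(temp_1_c)
    inv_2 = 1.0 / to_kelvin(temp_2_c)
    return BOLTZMANN_EV * math.log(life_1 / life_2) / (inv_1 - inv_2)


# Hypothetical example: 1.0 eV mechanism, 250 C stress versus 125 C use
af = acceleration_factor(1.0, use_c=125.0, stress_c=250.0)
stress_median_hours = 1000.0  # hypothetical median life at 250 C
print(f"acceleration factor ~ {af:.0f}x, "
      f"projected median life at 125 C ~ {stress_median_hours * af:.2e} h")
```

Measuring median lives at two or more stress temperatures and fitting the activation energy, as `activation_energy` does, is a common multi-sample route; the appeal of a single-device method is that it sidesteps this larger experiment.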

The increased emphasis on reliability from 1985 to 1995 made this the decade of catch up for GaAs. Study after study, lifetest after lifetest, and product after product demonstrated adequate reliability for consumer, industrial, automotive, and even aerospace demands.

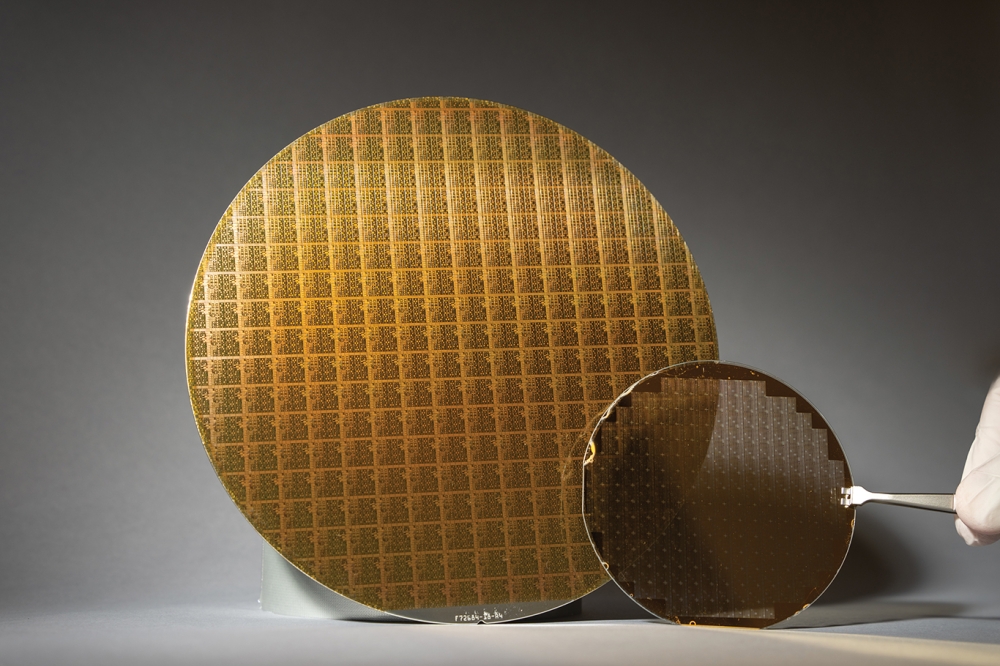

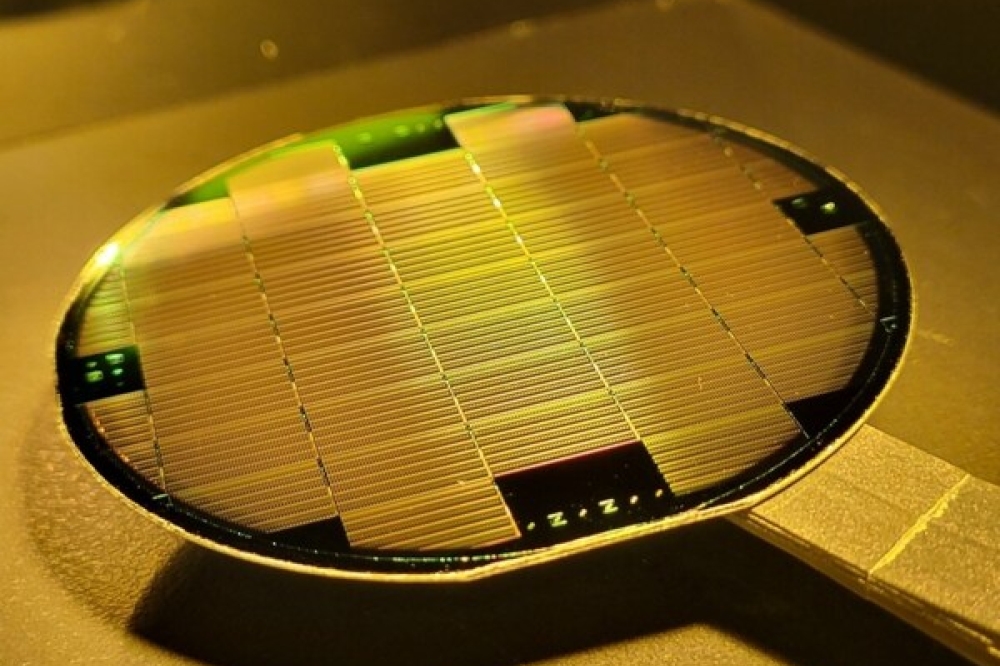

From 1995 onwards, efforts at estimating time to failure were combined with those to determine fallout distributions. Until then, reliability studies had been limited to sample sizes of 1 to 100 and torture times of 1 to 1000 hours. But Walter Poole from Anadigics set an entirely new benchmark for the scale of a fallout-distribution study, interrogating 12,000 samples for 12,000 hours. This helped compound semiconductor labs to emerge as bona fide fabs, but the reliability of silicon wasn't standing still.

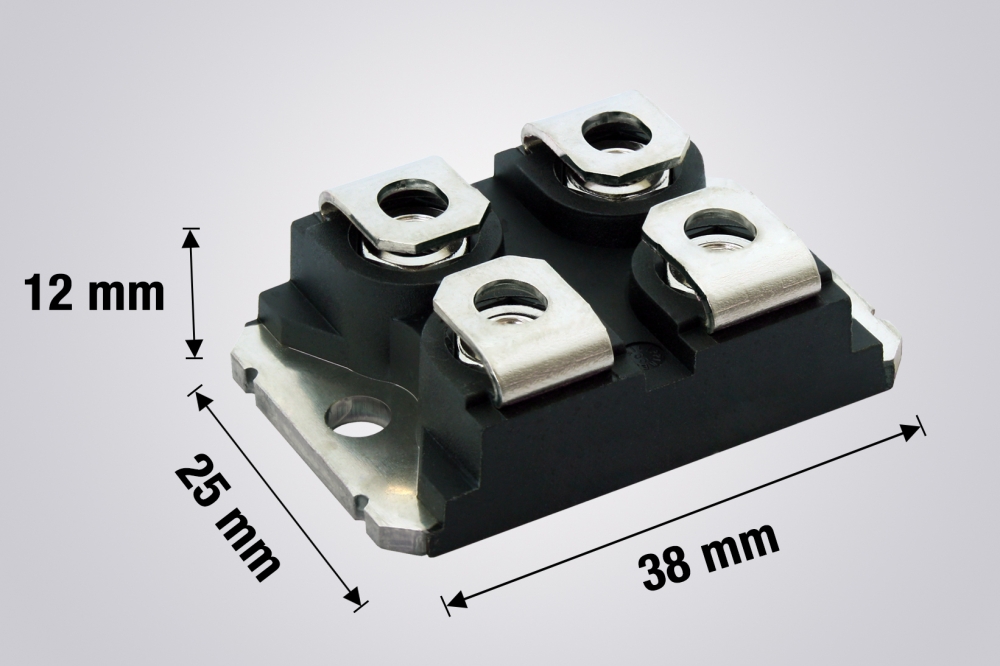

1995 was a bellwether year for compound semiconductors. Production volumes reached critical mass, and changes in product packaging arrived just in time. They were needed because GaAs had been suffering from 'hydrogen poisoning', an affliction that diminished reliability. The incumbent high-reliability hermetic packaging had an unintended consequence: hydrogen desorbed from the package was absorbed by the transistor, where it altered device characteristics. These changes in performance were noted in aerospace applications and in long-haul fibre channel distribution systems, all caused by hydrogen released from metal (typically Kovar) employed in the lid or in connections inside ceramic cavity packages.

The solution was both obvious and non-trivial. Mainstream silicon devices, both commercial and industrial, had already moved to smaller surface-mount, epoxy-moulded plastic packages, so switching to these was appealing. However, plastic packaging was not so easily adopted for high-speed, power-guzzling digital GaAs.

Nevertheless, the hydrogen phobia of 1989 to 1994 fuelled the switch to plastic for all but the most demanding applications, and in those particular cases, installing hydrogen getters mitigated the problem. Another milestone of 1995 was that it marked the beginning of the end for digital GaAs. Seymour Cray ran out of time when massively parallel machines surpassed the computing power of his speedy GaAs-based machine, foretelling a fate that followed just a few years later in personal computers, where faster bus speeds enabled by GaAs clock chips gave way to faster and wider microprocessors.

However, back in the 1990s, the real champions of performance and reliability for compound semiconductors were the optoelectronic components that underpinned the beginning of the "dot-com" era in 1995. At that time, fibre-optic infrastructure exploded. System capacity doubled every six months, with the deployment of InGaAsP lasers and InGaAs photodiodes enabling links operating at up to 10 Tb/s. To cram thousands of communication channels onto the fibre, engineers turned to GaAs and then InP multiplexers and demultiplexers. This provided one of the first opportunities for compounds to reach production volumes that broke out of laboratory status, and then to demonstrate reliability levels rivalling silicon.

Finally, in the late 1990s, Moore's 1965 prediction for GaAs came true. Driven by the integration and miniaturization of cell phones, compound semiconductor reliability advanced faster than for any previous consumer gadget known to mankind. The first fashion phones came into being, and a re-emphasis on quality and reliability followed. Makers of phones and their consumers redefined reliability as an expectation of seeing no failures, either in the handset factories or during the consumer's warranty period.

For the engineers working in the fabs, these expectations of ever-longer component lifetimes led to an emphasis on finding and removing early fallout. By the end of the 1990s, annual phone volumes for III-Vs topped hundreds of millions, and reliability sampling and stressing exceeded millions of samples per year. At this time, superheterodyne phone receivers evolved into digital radios, and the formative reliability methods of understanding mechanisms, distributions and acceleration factors were enhanced by efforts to engineer reliability improvements and build in reliability throughout the communications sector.

Beating hero with zero

During the last ten years, the most exciting development in compound semiconductor reliability has been the shift from measuring wearout to quantifying early-life failures. Taken to the extreme, this is like swinging the focus from reliability to quality, since the earliest chance to detect a failure is at the first measurement. In the journey to eliminate fallout, it makes sense for quality and reliability to blur together.

In 2006, Nien-Tsu Shen from Skyworks brought investigations of early-life measurements, conducted over the previous decades, into the public domain in the paper Perfect Quality for Free?!?, presented at the Compound Semiconductor Manufacturing Technology Conference. Makers of power amplifiers responded to the challenge, coming together to devise new methods of measuring reliability. Of course, the driver for measuring and reducing early failures is higher volume, but volume was not an issue: spending on phone microchips eclipsed that on computer microchips in 2011, enabling III-Vs to finally find their niche.

Detecting early-life fallout is far more challenging than estimating wearout lifetimes, because it is rare and getting rarer. Of course, every semiconductor will eventually wear out; it just has to be stressed hard enough and/or long enough to make an estimate of its life. But with mature technologies, early failures occur in very few samples. Most failures are linked to defects, which occur at rates of a few parts per million.

One of the benefits of volume is that it drives down defects far better than anything else. Poole's breakthrough in 1995, using 12,000 samples, enabled the detection of failure rates of 100 parts per million. That rate of failure was fine for the semiconductors of the 1980s and 1990s, but if it were occurring in 2014, it would have spawned around 4.5 million phone failures from last year's models! Today, high-volume producers of GaAs chips have early fallout approaching 1 part per million (a lower bound, based on return rates measured over the earlier years).
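The link between sample size and the smallest detectable failure rate can be made concrete with a simple binomial model, assuming each part fails independently with the same probability. This is an illustrative sketch, not the statistical method used in the studies cited here, and the 63 percent detection probability is an arbitrary choice. It shows why roughly ten thousand samples are needed to resolve 100 parts per million, and why resolving 1 part per million takes on the order of a million.

```python
import math


def samples_needed(failure_rate, detect_prob):
    """Number of samples needed to observe at least one failure with
    probability detect_prob, if each part fails independently at failure_rate."""
    return math.ceil(math.log1p(-detect_prob) / math.log1p(-failure_rate))


def zero_failure_upper_bound(n_samples, confidence):
    """Upper confidence bound on the failure rate when n_samples show no
    failures (exact binomial: (1 - p) ** n = 1 - confidence)."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_samples)


for ppm in (100, 1):
    n = samples_needed(ppm * 1e-6, detect_prob=0.63)
    print(f"{ppm:>3} ppm: ~{n:,} samples for a 63% chance of seeing one failure")

bound = zero_failure_upper_bound(12_000, confidence=0.95)
print(f"12,000 clean samples bound the rate below ~{bound * 1e6:.0f} ppm at 95% confidence")
```

Demanding a higher detection probability or confidence level raises the required sample count further, which is why early-life studies at part-per-million levels call for sampling in the millions.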

In the current era, maverick events set the upper bound on early failure rates. An example, described in the January & February 2013 edition of Compound Semiconductor, involved 30,000 samples stressed by solder-reflow simulations, thermal shock and autoclave humidity saturation. This type of investigation provided unique information, because stressing continued until the samples stopped failing, and because mere detection wasn't the goal: the aim was to predict the occurrence and cumulative degree of fallout. In this case, the accumulated death toll was 0.5 percent, but the silver lining was the decreasing rate of failure throughout early life, demonstrating that the earliest measurements offer the best chance of detection.
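A decreasing failure rate of this kind is commonly modelled with a Weibull distribution whose shape parameter beta is below 1. In the hedged sketch below, beta, the stress duration and its units are hypothetical; only the 0.5 percent accumulated fallout comes from the example above, and the code is not a reconstruction of that study's analysis.

```python
import math


def weibull_cdf(t, beta, eta):
    """Cumulative fraction of samples failed by time t."""
    return 1.0 - math.exp(-((t / eta) ** beta))


def weibull_hazard(t, beta, eta):
    """Instantaneous failure rate at time t; it falls with t when beta < 1."""
    return (beta / eta) * (t / eta) ** (beta - 1.0)


def eta_for_fallout(fraction, t, beta):
    """Scale parameter giving a cumulative fallout `fraction` at time t."""
    return t / (-math.log(1.0 - fraction)) ** (1.0 / beta)


BETA = 0.5    # hypothetical shape; beta < 1 models infant mortality
T_END = 1000  # hypothetical stress duration, arbitrary units
eta = eta_for_fallout(0.005, T_END, BETA)  # 0.5 % accumulated fallout

for t in (1, 10, 100, 1000):
    print(f"t={t:>5}: cumulative={weibull_cdf(t, BETA, eta):.4%}, "
          f"hazard={weibull_hazard(t, BETA, eta):.2e}")
```

Because the hazard falls with time, a large share of the eventual fallout appears early: with these hypothetical values, roughly a third of the 0.5 percent shows up in the first tenth of the stress period.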

What this means is that whether your measurement is described as quality, early-life fallout, maverick anomalies, infant failures or zero defects, this early assessment of reliability is just as critical as the electrical performance of a 'hero' device that stretches to the limit of Johnson's Figure of Merit.

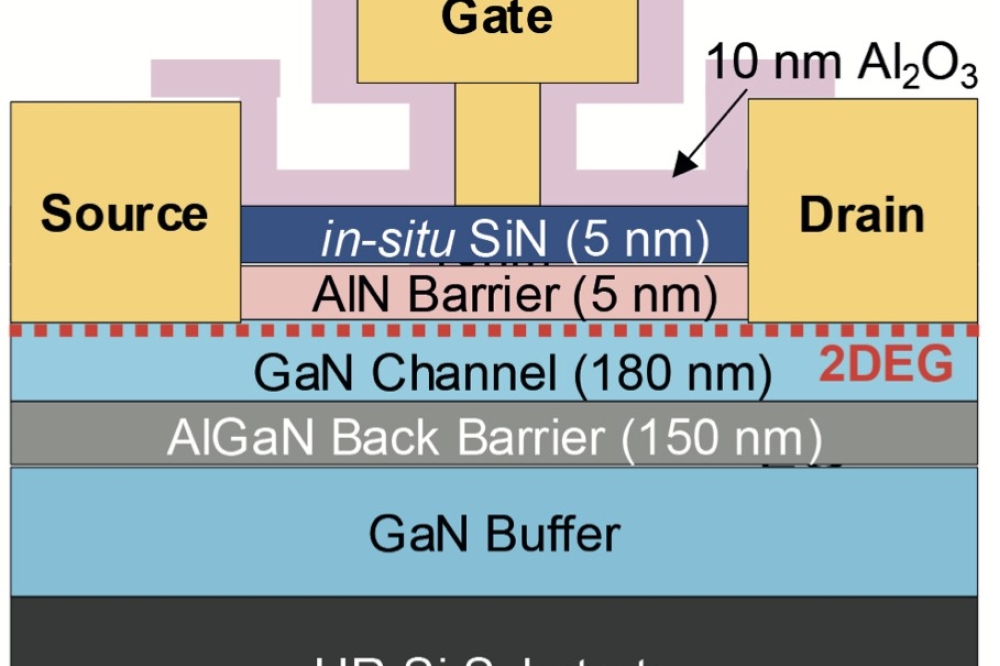

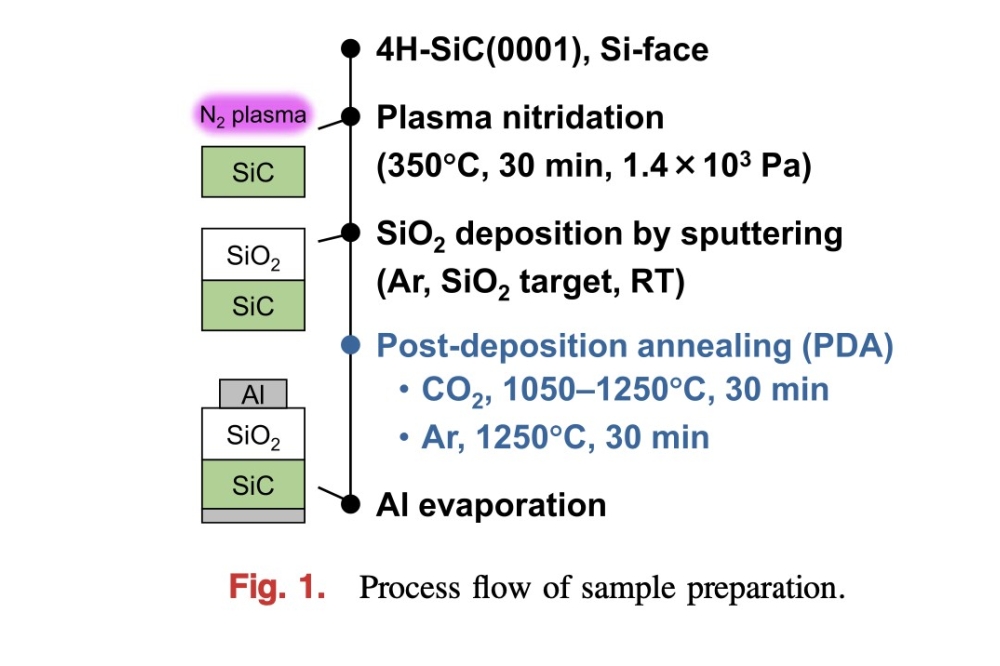

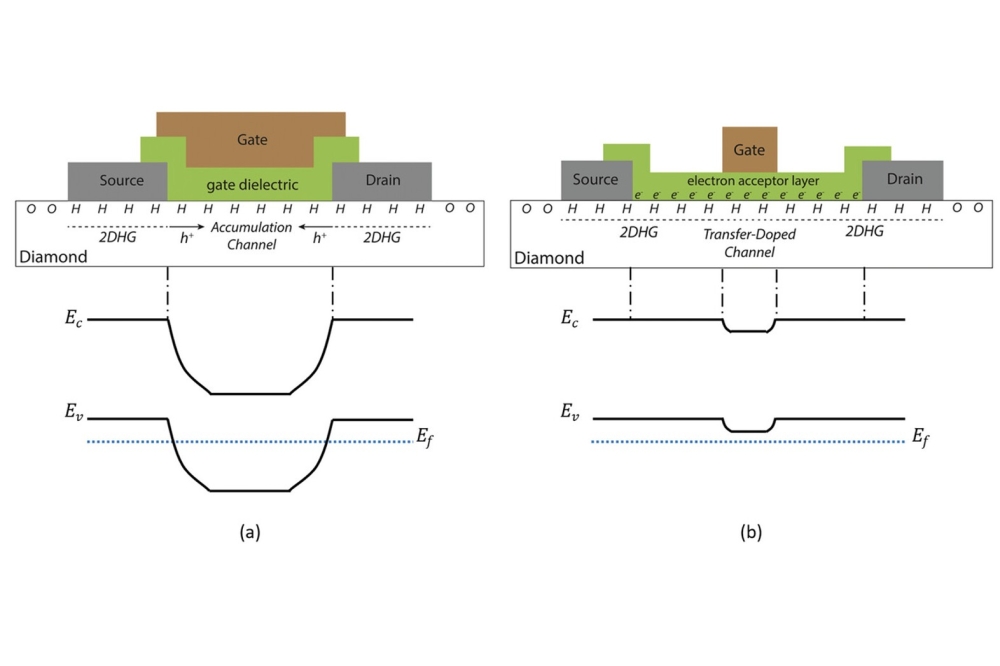

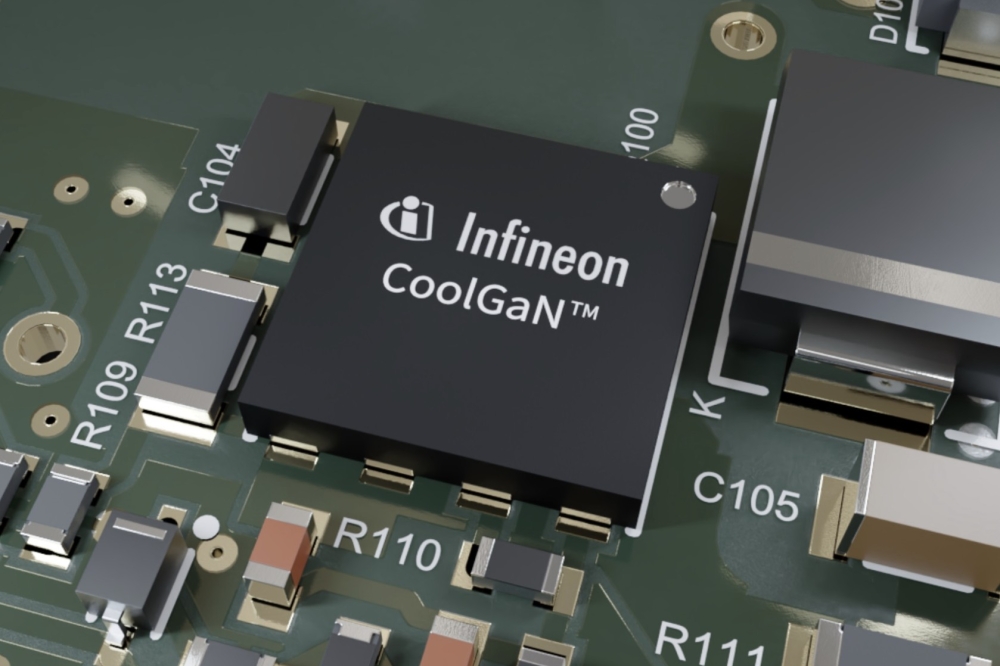

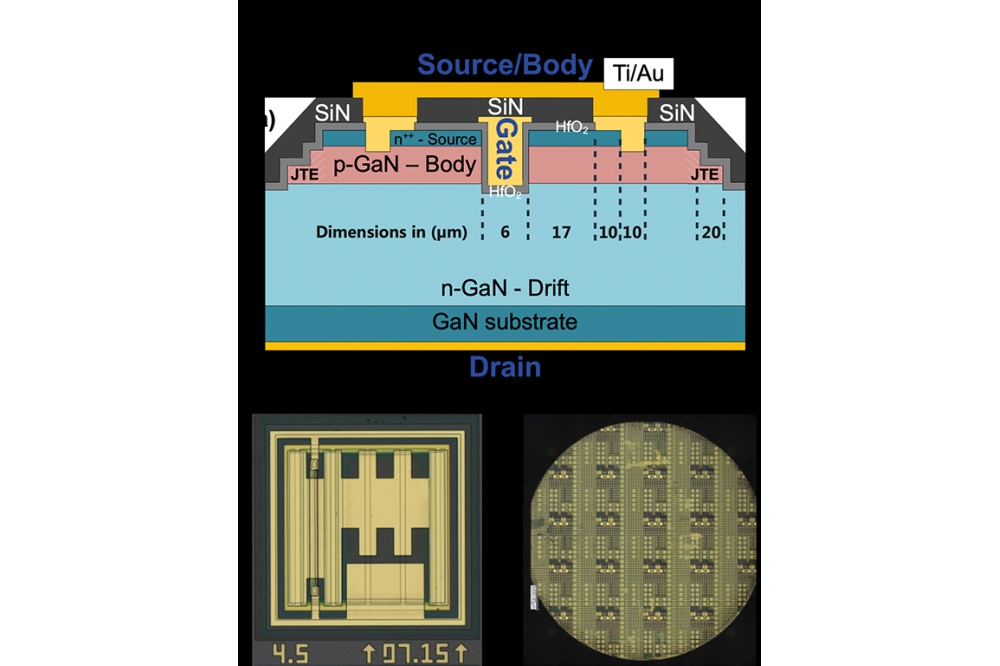

For the most promising material of our time, GaN, reliability publications surfaced in 2002. Since then, interest in this material from government and academia has grown. Based on the reliability learning curves for silicon and other compound semiconductors, one would have expected this technology to deliver accepted lifetimes within five years.

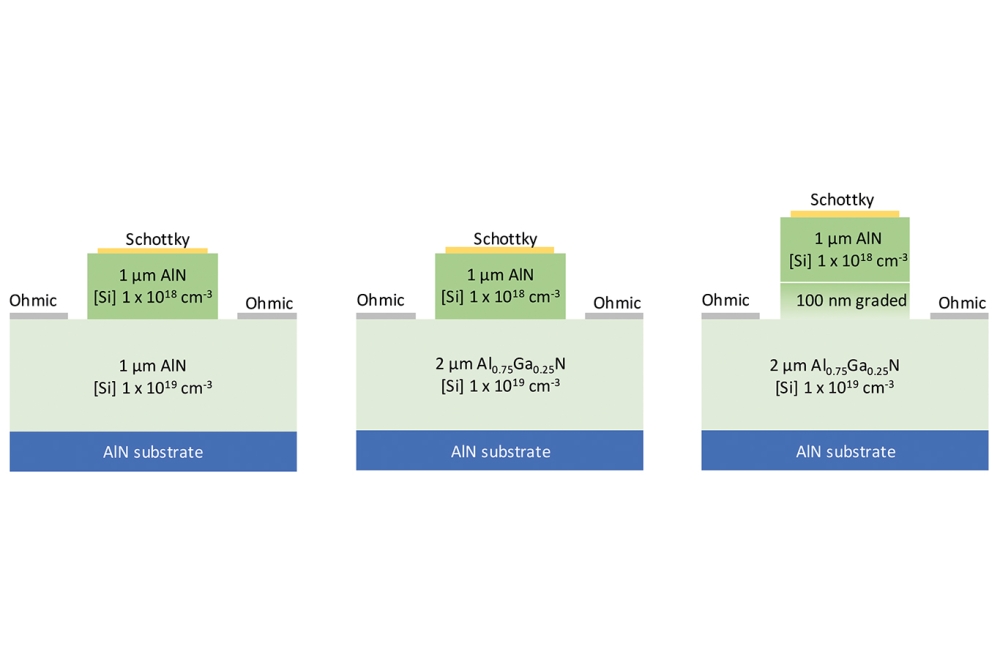

That has not been the case, however: GaN reliability is disputed and intensely debated to this day. This state of affairs is highlighted by the number of GaN reliability papers published by the IEEE, which, since 2010, has outnumbered those on GaAs by a factor of four. The 'building-in' reliability era for GaN has been problematic, with the material itself proving influential. Historically, adding more aluminium to the structures has boosted performance, but at the expense of reliability.

Fortunately, the slow progress in GaN RF circuit reliability has not held back all GaN developments. Consider, for example, what has happened of late with the GaN LEDs that are lighting our world.

It is interesting to note that the silicon juggernaut has now developed a problem with reliability lifetimes. Since the introduction of the 180 nm node at the turn of the millennium, circuit lifetimes have plummeted, falling from 20 million hours to 200,000 hours for the 65 nm node reached in 2007. Now, the latest ICs sport transistors at the 14 nm node, and the reliability penalty to pay for this (if any) is yet to be determined.

What this might mean at even smaller nodes is hard to say, for below 10 nm there is a need to introduce a new material, and III-Vs have been mentioned as a possible saviour! Wouldn't it be ironic if the predictions of Moore and Johnson came together after 50 years so that both prophecies come to fruition, with reliability driving what neither could achieve independently?