Praying mantis eyes promise better machine vision

Self-driving cars occasionally crash because their visual systems can’t always process static or slow-moving objects in 3D space. They’re rather like insects with monocular vision, whose compound eyes provide great motion-tracking and a wide field of view but poor depth perception.

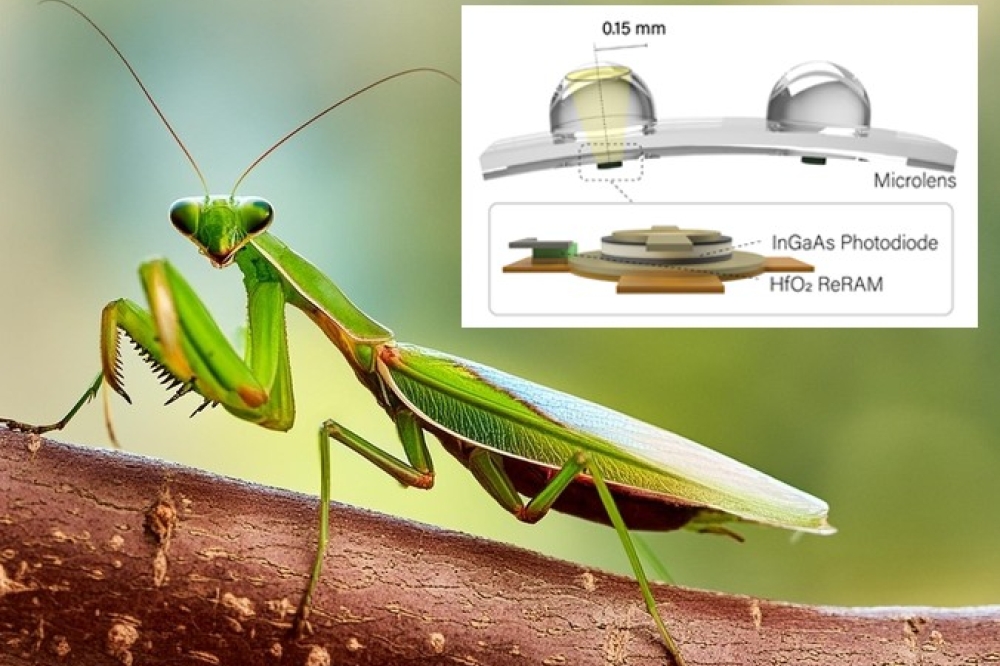

The praying mantis is an exception. Its field of view overlaps between its left and right eyes, creating binocular vision with depth perception in 3D space.
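The geometry behind binocular depth perception can be illustrated with a small triangulation sketch. It assumes an idealised two-dimensional setup that is not taken from the researchers' paper: two eyes separated by a known baseline, each reporting only the bearing angle to the same target. The function name `triangulate` and all numeric values are hypothetical.

```python
import math

def triangulate(baseline_m: float, left_angle_rad: float, right_angle_rad: float):
    """Locate a target from two bearing angles (idealised 2D geometry).

    The eyes sit at x = -baseline/2 and x = +baseline/2 and look along +y.
    Each angle is measured from the forward (+y) axis, positive towards +x.
    Returns (x, y), where y is the forward distance (depth) of the target.
    """
    spread = math.tan(left_angle_rad) - math.tan(right_angle_rad)
    if spread <= 0:
        raise ValueError("lines of sight do not converge in front of the eyes")
    depth = baseline_m / spread
    lateral = depth * math.tan(left_angle_rad) - baseline_m / 2
    return lateral, depth

# Illustrative values only: eyes 2 cm apart, target 10 cm straight ahead.
left = math.atan2(0.01, 0.10)    # target appears slightly right of the left eye
right = math.atan2(-0.01, 0.10)  # and slightly left of the right eye
x, depth = triangulate(0.02, left, right)
```

A single eye supplies only one bearing, which fixes a direction but not a distance; the second, offset viewpoint is what pins down depth.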

Now researchers at the University of Virginia School of Engineering and Applied Science have used these insights to develop artificial compound eyes that work faster and more accurately than today’s vision systems.

Their paper, 'Stereoscopic artificial compound eyes for spatiotemporal perception in three-dimensional space', appeared in the May 15, 2024 edition of Science Robotics.

“After studying how praying mantis eyes work, we realised a biomimetic system that replicates their biological capabilities required developing new technologies,” said Byungjoon Bae, a PhD candidate in the Charles L. Brown Department of Electrical and Computer Engineering.

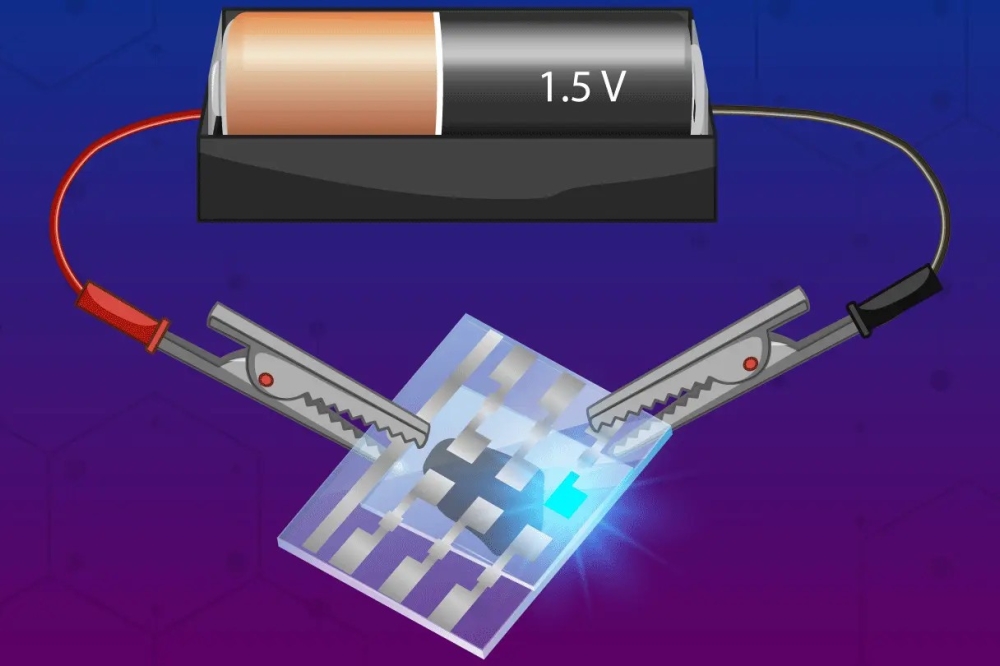

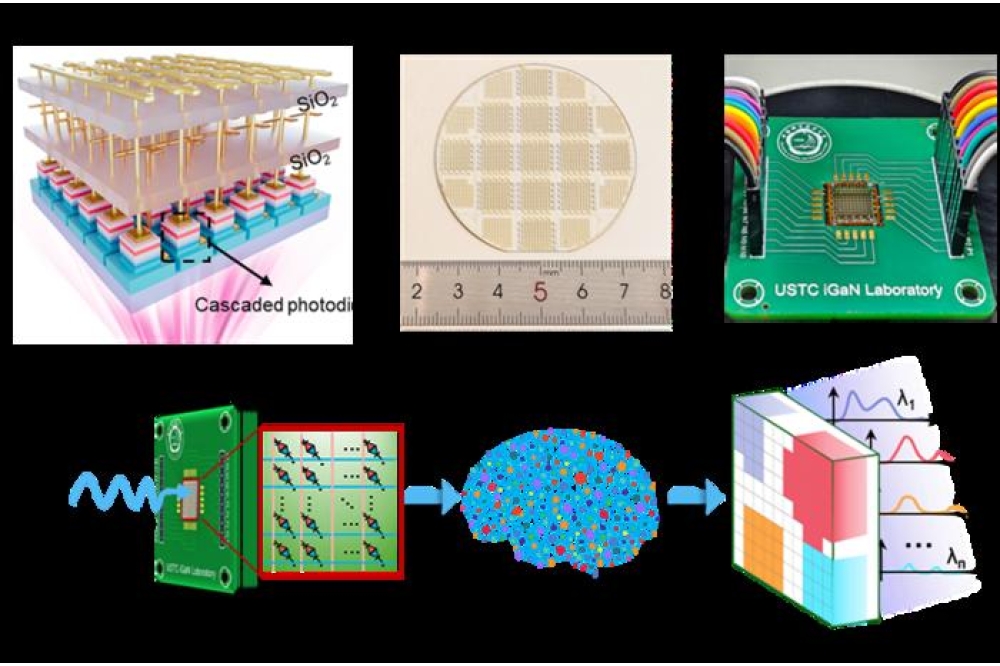

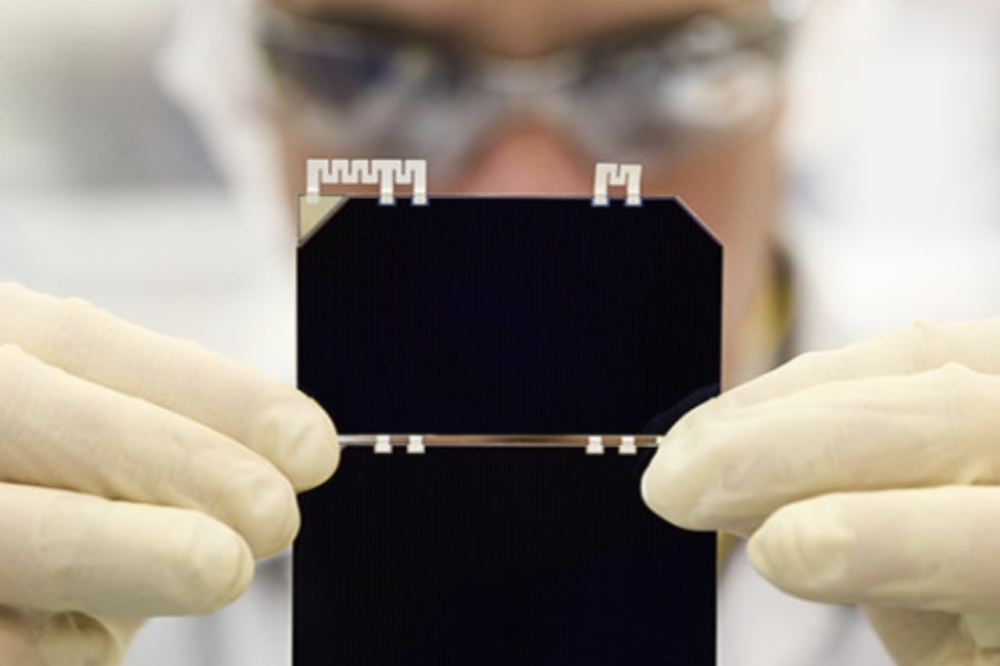

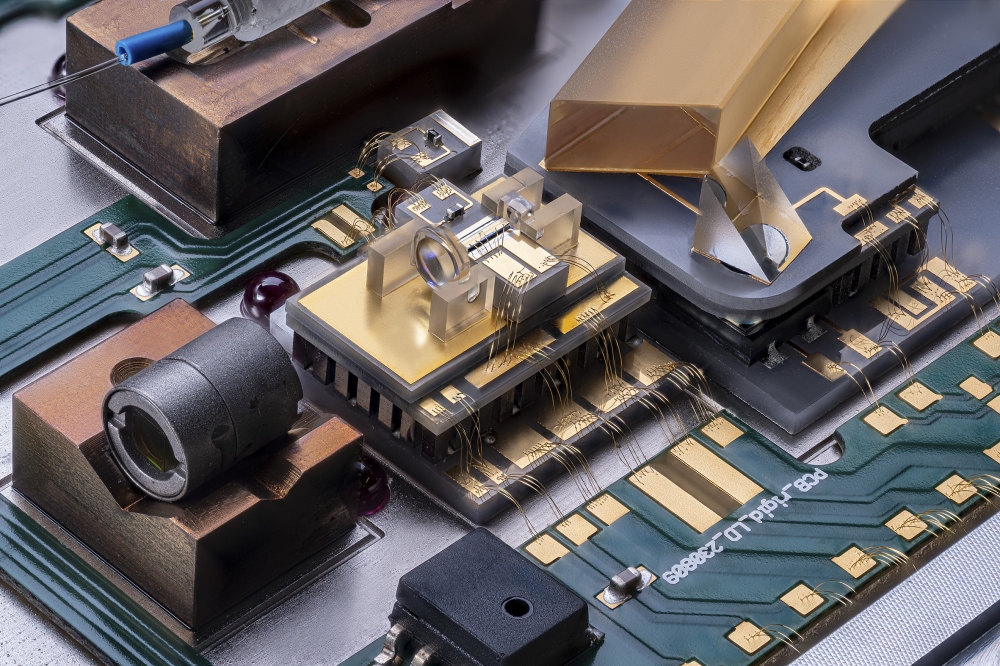

The team have integrated microlenses and multiple InGaAs photodiodes, which produce an electrical current when exposed to light. Flexible semiconductor materials emulate the convex shapes and faceted positions within mantis eyes.

The team used epitaxial liftoff technology to fabricate thin-film InGaAs photodiodes on a flexible substrate. These photodiodes were then paired with HfO2 resistive random-access memory (ReRAM) units to create a one-photodiode, one-resistor (1P-1R) focal plane array (FPA) on a flexible Kapton substrate.

This configuration allows the optical signals received by the photodiodes to be modulated, effectively emulating pigment cells and receptors found in the arthropod visual system.

Subsequently, each pixel was combined with a polymethyl methacrylate-based microlens array to improve focusing capabilities. Then, the fabricated FPAs were shaped into a hemispherical form with a 20-mm radius.

“Making the sensor in hemispherical geometry while maintaining its functionality is a state-of-the-art achievement, providing a wide field of view and superior depth perception,” Bae said.

“The system delivers precise spatial awareness in real time, which is essential for applications that interact with dynamic surroundings.”

Such uses include low-power vehicles and drones, self-driving vehicles, robotic assembly, surveillance and security systems, and smart home devices.

Bae, whose adviser is Kyusang Lee, an associate professor in the department with a secondary appointment in materials science and engineering, is first author of the paper in Science Robotics.

Among the team’s important findings on the lab’s prototype system was a potential reduction in power consumption of more than 400-fold compared with traditional visual systems.

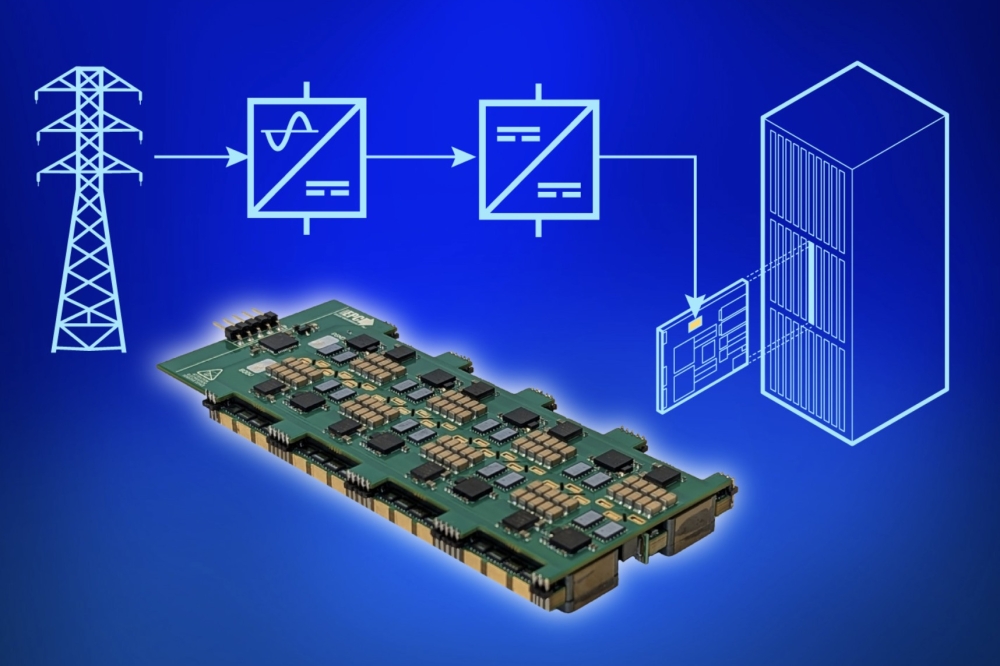

Benefits of Edge Computing

Rather than using cloud computing, Kyusang Lee’s system can process visual information in real time, nearly eliminating the time and resource costs of data transfer and external computation, while minimising energy usage.

“The technological breakthrough of this work lies in the integration of flexible semiconductor materials, conformal devices that preserve the exact angles within the device, an in-sensor memory component, and unique post-processing algorithms,” Bae said.

The key is that the sensor array continuously monitors changes in the scene, identifying which pixels have changed and encoding this information into smaller data sets for processing.
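The idea of sending only changed pixels can be sketched in software. This is a minimal illustration, not the team's method: their encoding happens inside the sensor hardware, whereas the example below simply compares two small greyscale frames. The names `encode_changes` and `apply_changes`, and the threshold value, are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]        # rows of greyscale pixel values
Event = Tuple[int, int, int]   # (row, column, new value)

def encode_changes(prev: Frame, curr: Frame, threshold: int = 10) -> List[Event]:
    """Return one event per pixel whose value changed by more than threshold."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            if abs(new - old) > threshold:
                events.append((r, c, new))
    return events

def apply_changes(prev: Frame, events: List[Event]) -> Frame:
    """Rebuild an approximation of the current frame from the previous one."""
    out = [row[:] for row in prev]  # copy so the previous frame is untouched
    for r, c, value in events:
        out[r][c] = value
    return out

# Illustrative 2 x 3 frames: one pixel changes a lot, one changes slightly.
previous = [[0, 0, 0], [0, 0, 0]]
current = [[0, 50, 0], [0, 0, 5]]
events = encode_changes(previous, current)
rebuilt = apply_changes(previous, events)
```

In this toy case six pixel values shrink to a single event, because the small change of 5 falls below the assumed threshold and is treated as noise.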

The approach mirrors how insects perceive the world through visual cues, differentiating pixels between scenes to understand motion and spatial data. For example, like other insects — and humans, too — the praying mantis can process visual data rapidly by using the phenomenon of motion parallax, in which nearer objects appear to move faster than distant objects. Only one eye is needed to achieve the effect, but motion parallax alone isn’t sufficient for accurate depth perception.
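Motion parallax can be put into numbers with a short sketch. It assumes a simplified case that is not drawn from the paper: an observer moving sideways at constant speed past a stationary object, evaluated at the instant the object is directly to the side, where the apparent angular speed is the observer's speed divided by the distance. The function name `angular_speed` and the values are hypothetical.

```python
def angular_speed(observer_speed_m_s: float, distance_m: float) -> float:
    """Apparent angular speed (rad/s) of a static object directly abeam of an
    observer moving sideways, using omega = v / d."""
    return observer_speed_m_s / distance_m

# Illustrative values only: the observer moves sideways at 0.5 m/s.
near = angular_speed(0.5, 1.0)    # object 1 m away
far = angular_speed(0.5, 10.0)    # object 10 m away

# Twice the speed at twice the distance looks identical to the 'near' case.
ambiguous = angular_speed(1.0, 2.0)
```

The nearer object sweeps across the view ten times faster than the distant one. The last line hints at one reason parallax alone may fall short: without an accurate estimate of the observer's own speed, different distances can produce the same apparent motion.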

“The seamless fusion of these advanced materials and algorithms enables real-time, efficient and accurate 3D spatiotemporal perception,” said Lee, an early-career researcher in thin-film semiconductors and smart sensors.

This work was supported by the US National Science Foundation and the US Air Force Office of Scientific Research.