News Article

VCSELs and MEMS unite to enhance 3-D imaging

A new low-power, compact laser combining III-Vs and silicon could provide exceptional range for potential use in self-driving cars, smartphones and interactive video games

A new twist on 3-D imaging technology could one day enable your self-driving car to spot a child in the street half a block away, let you answer your smartphone from across the room with a wave of your hand, or play “virtual tennis” on your driveway.

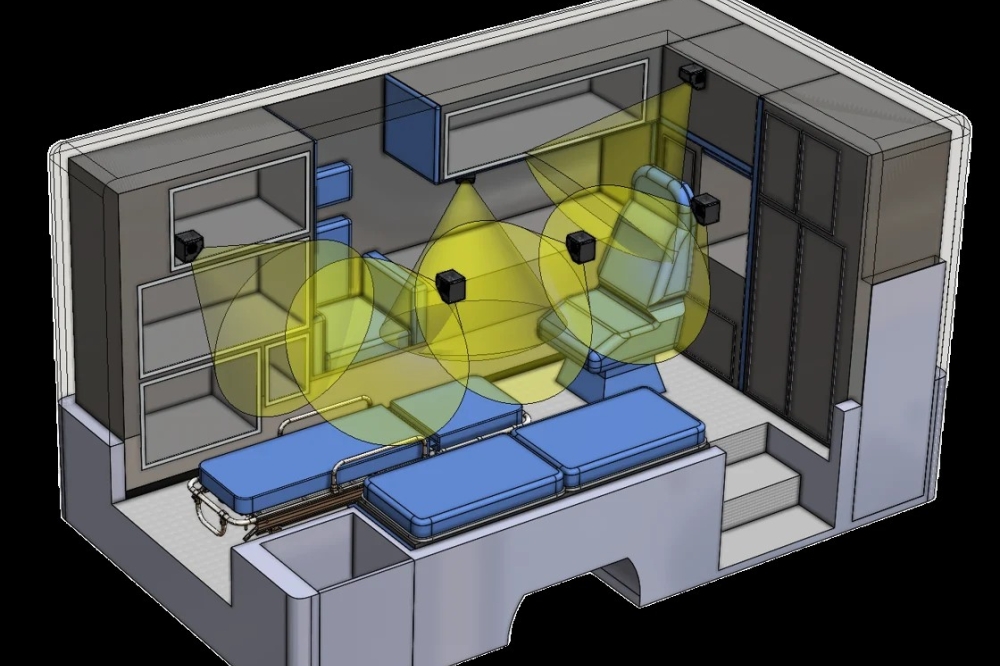

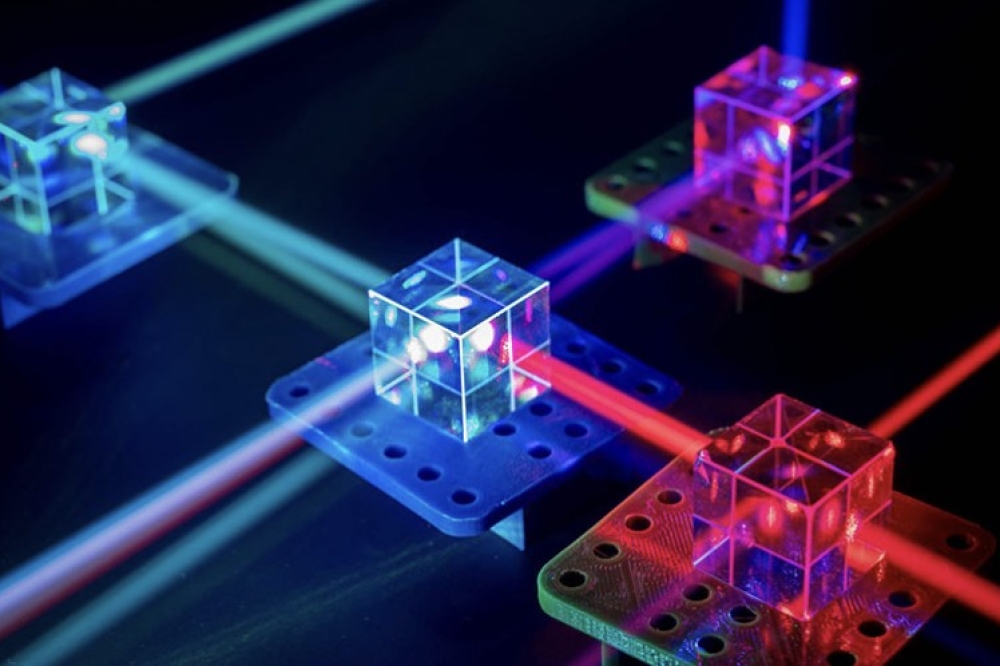

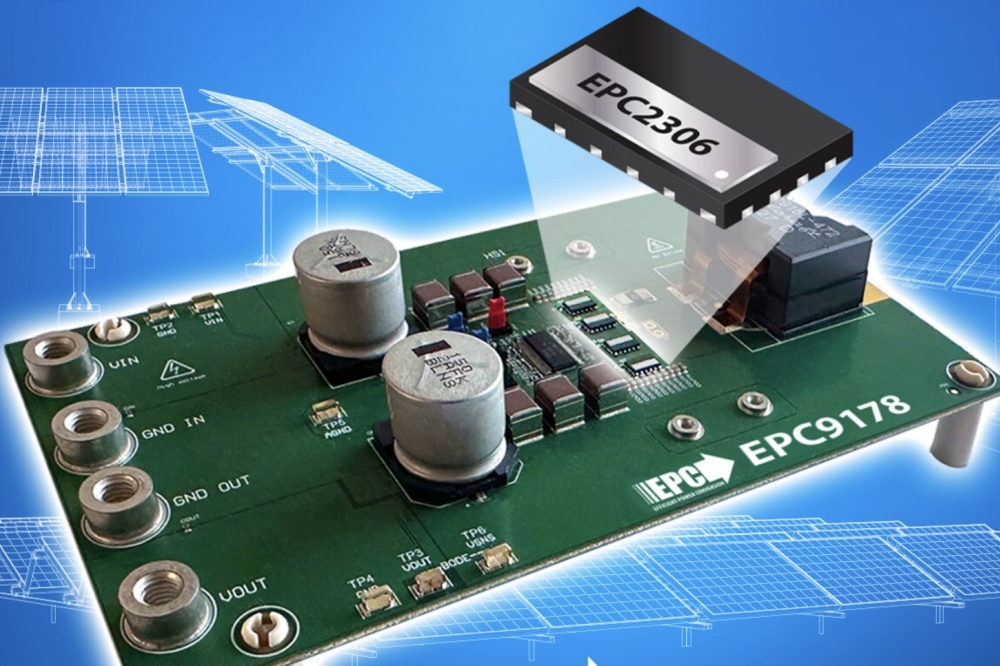

A conceptual vision for an integrated 3-D camera with multiple pixels using the FMCW laser source (Credit: Behnam Behroozpour)

The new system, developed by researchers at the University of California, Berkeley, can remotely sense objects at distances of up to thirty feet, ten times farther than comparable low-power laser systems can currently manage.

With further development, the technology could be used to make smaller, cheaper 3-D imaging systems that offer exceptional range for potential use in self-driving cars, smartphones and interactive video games like Microsoft’s Kinect, all without the need for big, bulky boxes of electronics or optics.

“While metre-level operating distance is adequate for many traditional metrology instruments, the sweet spot for emerging consumer and robotics applications is around ten metres,” or just over thirty feet, says UC Berkeley’s Behnam Behroozpour. “This range covers the size of typical living spaces while avoiding excessive power dissipation and possible eye safety concerns.”

The new system relies on LIDAR (light radar), a 3-D imaging technology that uses light to sense the world around it. LIDAR systems of this type emit laser light that hits an object and then determine how far away that object is by measuring changes in the frequency of the light reflected back. LIDAR can help self-driving cars avoid obstacles halfway down the street, or help video games tell when you are jumping, pumping your fists or swinging a racket at an imaginary tennis ball across an imaginary court.
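As a rough illustration of the distances involved, the short Python sketch below computes how long light takes to reach a target and return; the function and constant names are illustrative only, not taken from the Berkeley work. At ten metres the round trip lasts only about 67 nanoseconds, and moving the target 1 cm farther adds roughly 67 picoseconds, which hints at why reading distance from a frequency, rather than timing the echo directly, is attractive for compact, low-power hardware.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)
METRES_PER_FOOT = 0.3048


def round_trip_delay_s(distance_m: float) -> float:
    """Time for light to travel to a target at distance_m and back, in seconds."""
    return 2.0 * distance_m / C


def metres_to_feet(distance_m: float) -> float:
    """Convert metres to feet (1 ft = 0.3048 m exactly)."""
    return distance_m / METRES_PER_FOOT


for d in (1.0, 10.0):
    print(f"{d:5.1f} m ({metres_to_feet(d):5.1f} ft): "
          f"round trip {round_trip_delay_s(d) * 1e9:6.2f} ns")

# Extra delay caused by moving the target 1 cm farther away, in picoseconds.
print(f"1 cm shift: {round_trip_delay_s(0.01) * 1e12:.1f} ps")
```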

Current lasers used in high-resolution LIDAR imaging, however, can be large, power-hungry and expensive. Gaming systems require big, bulky boxes of equipment, and you have to stand within a few feet of the system for it to work properly, Behroozpour says. Bulkiness is also a problem for driverless cars such as Google’s, which must carry a large 3-D camera on the roof.

The researchers set out to reduce the size and power consumption of LIDAR systems without sacrificing operating distance.

In their new system, the team used a type of LIDAR called frequency-modulated continuous-wave (FMCW) LIDAR, which they felt would give their imager good resolution at lower power consumption, Behroozpour says. This type of system shines “frequency-chirped” laser light (that is, light whose frequency steadily increases or decreases over time) onto an object and then compares the frequency of the reflected light with that of the light currently being emitted. Because the chirp keeps sweeping while the light makes its round trip, the frequency difference grows with the object’s distance.
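The ranging principle can be sketched numerically. The Python example below assumes an ideal linear chirp of bandwidth B swept over a time T, so the chirp rate is S = B/T; a target at distance R returns light delayed by τ = 2R/c, producing a beat frequency f_b = Sτ = 2RS/c. The chirp and sampling parameters are hypothetical values chosen for illustration (the article does not give the team’s figures), and noise, chirp nonlinearity and Doppler shifts are ignored. The sketch simulates one sweep’s beat signal, finds its strongest frequency with a plain discrete Fourier transform, and converts that frequency back into a distance.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

# Hypothetical chirp parameters, for illustration only.
BANDWIDTH_HZ = 1.5e9    # optical frequency excursion of one linear sweep, B
SWEEP_TIME_S = 1e-3     # duration of one sweep, T
SAMPLE_RATE_HZ = 5e5    # sampling rate of the photodetector beat signal

SLOPE_HZ_PER_S = BANDWIDTH_HZ / SWEEP_TIME_S  # chirp rate S = B / T


def beat_frequency(distance_m: float) -> float:
    """Beat frequency f_b = S * tau, where tau = 2R / c is the round-trip delay."""
    tau = 2.0 * distance_m / C
    return SLOPE_HZ_PER_S * tau


def range_from_beat(f_beat_hz: float) -> float:
    """Invert f_b = 2 R S / c to recover the target distance R in metres."""
    return C * f_beat_hz / (2.0 * SLOPE_HZ_PER_S)


def estimate_range(distance_m: float) -> float:
    """Simulate one sweep's beat signal, find its peak with a plain DFT, return range."""
    n = int(round(SAMPLE_RATE_HZ * SWEEP_TIME_S))  # samples per sweep
    f_b = beat_frequency(distance_m)
    samples = [math.cos(2 * math.pi * f_b * k / SAMPLE_RATE_HZ) for k in range(n)]

    best_bin, best_power = 0, -1.0
    for b in range(1, n // 2):  # skip the DC bin and stay below Nyquist
        re = sum(x * math.cos(2 * math.pi * b * k / n) for k, x in enumerate(samples))
        im = sum(x * math.sin(2 * math.pi * b * k / n) for k, x in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = b, power

    f_peak = best_bin * SAMPLE_RATE_HZ / n  # bin spacing is fs / n = 1 / T
    return range_from_beat(f_peak)


if __name__ == "__main__":
    for d in (3.0, 10.0):
        print(f"target {d:5.2f} m -> beat {beat_frequency(d) / 1e3:8.3f} kHz, "
              f"estimated {estimate_range(d):6.3f} m")
```

With these assumed numbers, a 10 m target produces a beat of about 100 kHz, and the estimate lands within one range bin of the true distance. The DFT bin spacing is 1/T, which maps to a range step of c/(2B), so in this idealized model range resolution is set by the chirp bandwidth rather than by timing precision. A practical system would use an FFT instead of this O(N²) loop.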

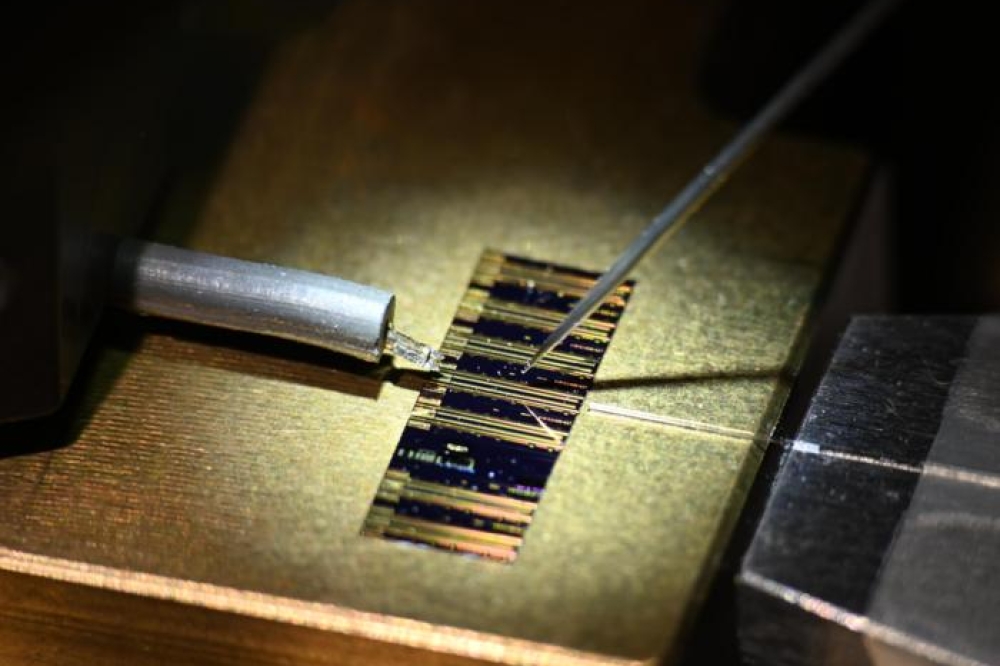

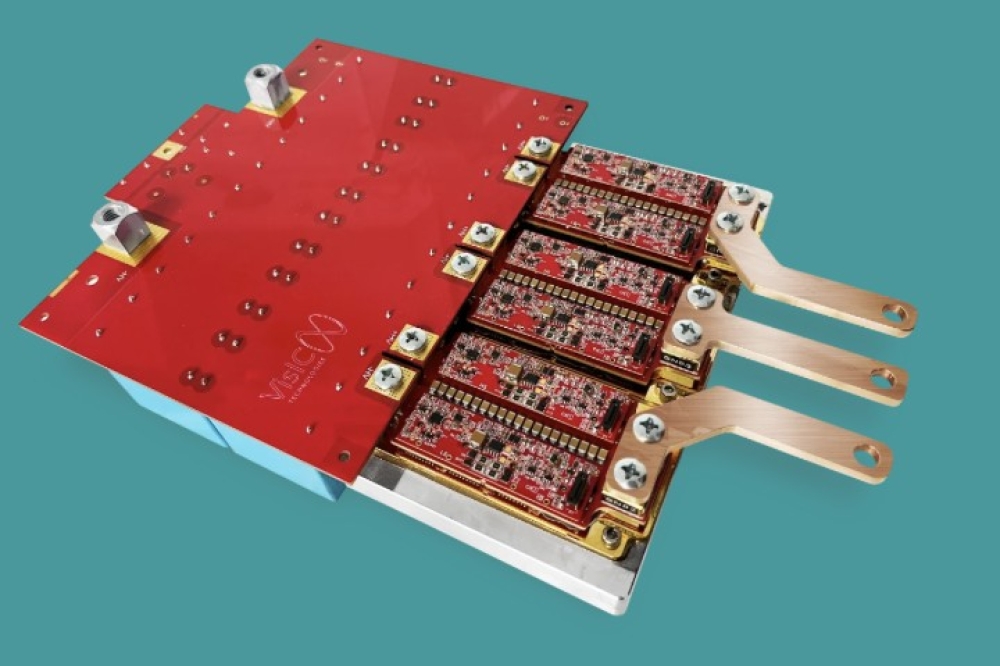

3-D schematic showing MEMS-electronic-photonic heterogeneous integration (Credit: Niels Quack)

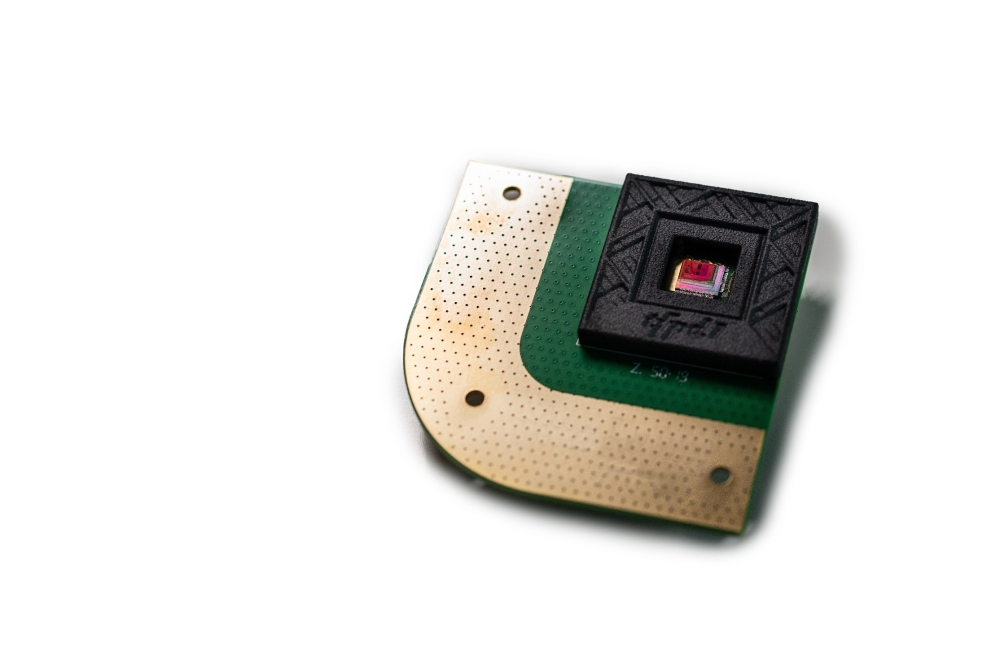

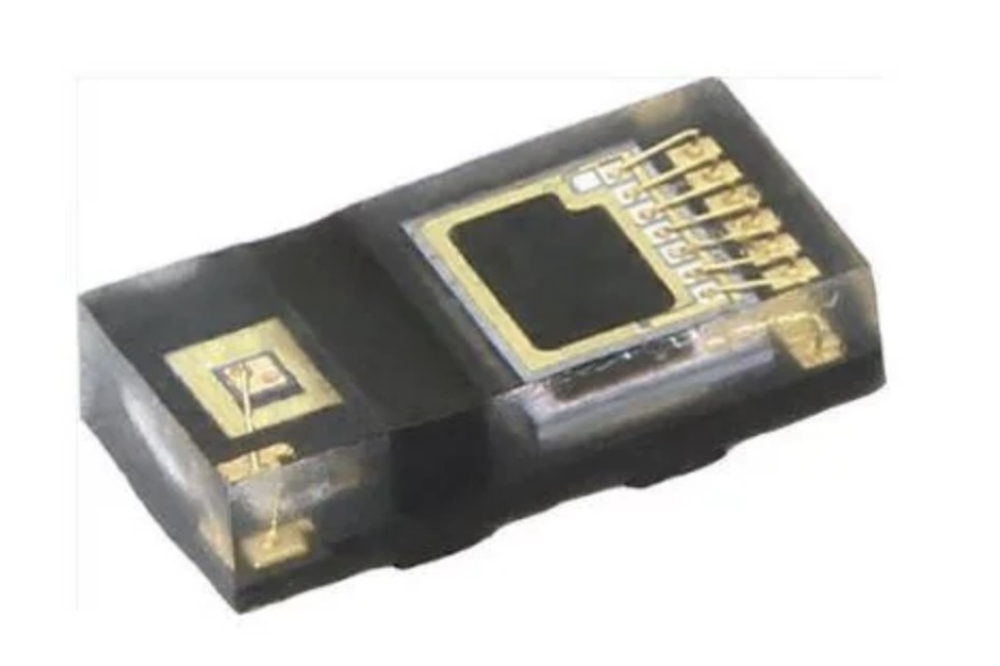

To avoid these drawbacks of size, power and cost, the Berkeley team exploited a class of lasers called MEMS tuneable VCSELs. MEMS (microelectromechanical systems) are tiny, micro-scale machines that, in this case, change the frequency of the laser light to produce the chirp, while VCSELs (vertical-cavity surface-emitting lasers) are inexpensive, integrable III-V semiconductor lasers with low power consumption. By operating the MEMS device at its resonance, the natural frequency at which it vibrates, the researchers were able to amplify the system’s signal without a large increase in power consumption.
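The benefit of driving at resonance can be illustrated with the textbook model of a MEMS structure as a driven, damped harmonic oscillator; this model is an assumption for illustration, not the team’s published analysis. For a drive at frequency f, with r = f/f0 relative to the resonance f0, the steady-state displacement normalised to the static deflection produced by the same force amplitude is 1/sqrt((1 - r²)² + (r/Q)²), which reaches the quality factor Q at resonance. The resonance frequency and Q used below are hypothetical.

```python
import math


def amplitude_gain(drive_hz: float, resonance_hz: float, quality_factor: float) -> float:
    """Steady-state displacement of a driven, damped harmonic oscillator,
    normalised to the static deflection produced by the same force amplitude."""
    r = drive_hz / resonance_hz  # drive frequency relative to resonance
    return 1.0 / math.sqrt((1.0 - r * r) ** 2 + (r / quality_factor) ** 2)


# Hypothetical MEMS parameters, for illustration only.
RESONANCE_HZ = 100e3
QUALITY_FACTOR = 50.0

for f in (1e3, 50e3, 100e3, 200e3):
    gain = amplitude_gain(f, RESONANCE_HZ, QUALITY_FACTOR)
    print(f"drive {f / 1e3:6.1f} kHz -> displacement gain {gain:6.2f}x")
```

In this model, driving at resonance yields Q times more mechanical motion for the same drive force. In a MEMS tunable VCSEL, a larger movable-mirror excursion gives a wider wavelength sweep, so this is one way to see how resonant operation can enlarge the chirp without a proportional rise in drive power.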

“Generally, increasing the signal amplitude results in increased power dissipation,” Behroozpour says. “Our solution avoids this tradeoff, thereby retaining the low power advantage of VCSELs for this application.”

The team’s next step is to integrate the VCSEL, photonics and electronics into a chip-scale package. Consolidating these parts should open up possibilities for “a host of new applications that have not even been invented yet,” Behroozpour says, including the ability to use your hand, Kinect-style, to silence your ringtone from thirty feet away.

Behnam Behroozpour will describe the team’s work at CLEO: 2014 in presentation AW3H.2, titled “Method for Increasing the Operating Distance of MEMS LIDAR beyond Brownian Noise Limitation,” which will take place on Wednesday, June 11th at 4:45 p.m. in Room 210H of the San Jose Convention Centre.

The Conference on Lasers and Electro-Optics (CLEO) is being held from June 8th to 13th at the San Jose Convention Centre in San Jose, California, USA.
