News Article

Next-generation infrared detectors on silicon

Growing infrared detectors on large-area substrates would enhance capabilities in astronomy, remote detection and medical diagnosis

Cheaper, larger and better infrared detectors grown on silicon wafers could give more scientists access to infrared astronomy and further spur the hunt for exoplanets and the study of the universe's acceleration.

Closer to home, the same technology could also advance remote sensing and medical imaging.

The National Science Foundation (NSF) has awarded the Rochester Institute of Technology (RIT) $1.2 million to develop, fabricate and test a new family of detectors grown on silicon wafer substrates by US-based imaging systems developer Raytheon Vision Systems.

"If this is successful, the astronomy community will have a ready supply of affordable detectors that could be deployed on a wider range of facilities," says Don Figer, director of the Center for Detectors at RIT and lead scientist on the project. "Right now infrared detectors are so expensive that there are only a few on the world's biggest telescopes - Keck, Gemini, the Very Large Telescope."

"These are the only facilities that can afford them, and then they can only afford a few. They have big telescopes with big focal planes and tiny detectors in the middle," he adds.

Building infrared detectors on silicon wafers would let manufacturers draw on the existing infrastructure of the semiconductor industry and drive down the cost of the detectors.

"The collaboration with RIT leverages over a decade of technological advancements Raytheon has made in manufacturing large format MBE/Si focal planes," says Elizabeth Corrales, program manager at Raytheon Vision Systems. "Infrared detectors with lower cost focal planes and improved performance will push the boundaries of infrared astronomy and continue Raytheon's 30-year service to the astronomy community."

Cost constraints limit the availability and size of today's detectors, which are grown on small cadmium zinc telluride wafers that are produced only in limited quantities.

"Today, a typical state-of-the-art device has 2,048 by 2,048 pixels at a cost around $350,000 to $500,000," Figer points out. "Detectors on large telescopes can cost a significant fraction of the total instrument budget. Very large, affordable infrared arrays will be essential for making optimum use of the proposed 30-metre class ground-based telescopes of the future."

"The key to making larger - up to 14,000 by 14,000 pixels - and less expensive infrared detectors lies in using silicon wafer substrates, since large silicon wafers are common in the high-volume semiconductor industry and their coefficient of thermal expansion is well-matched to that of the silicon readout circuits," Figer says.

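As a rough illustration of the figures quoted above, the short Python sketch below works out the cost per pixel of today's 2,048 by 2,048 arrays and how many more pixels a 14,000 by 14,000 array would hold. It uses only the numbers given in the article and does not model manufacturing yield or any real pricing.

```python
# Figures quoted in the article: a 2,048 x 2,048 array costs about
# $350,000 to $500,000, and silicon substrates could allow up to 14,000 x 14,000.
CURRENT_SIDE = 2_048
FUTURE_SIDE = 14_000
COST_LOW, COST_HIGH = 350_000, 500_000  # US dollars

current_pixels = CURRENT_SIDE ** 2
future_pixels = FUTURE_SIDE ** 2

# Cost per pixel of today's state-of-the-art array
cost_per_pixel_low = COST_LOW / current_pixels
cost_per_pixel_high = COST_HIGH / current_pixels

# How many times more pixels the larger format would hold
scale_factor = future_pixels / current_pixels

print(f"2k array: {current_pixels:,} pixels")
print(f"cost per pixel: ${cost_per_pixel_low:.3f} to ${cost_per_pixel_high:.3f}")
print(f"14k array: {future_pixels:,} pixels, {scale_factor:.1f}x more")
```

The larger format holds roughly 47 times as many pixels, which is why the price per pixel, not just the price per device, has to fall for such arrays to be affordable.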
For the last 15 years, scientists have pursued silicon as a substitute substrate in the quest for large infrared detectors.

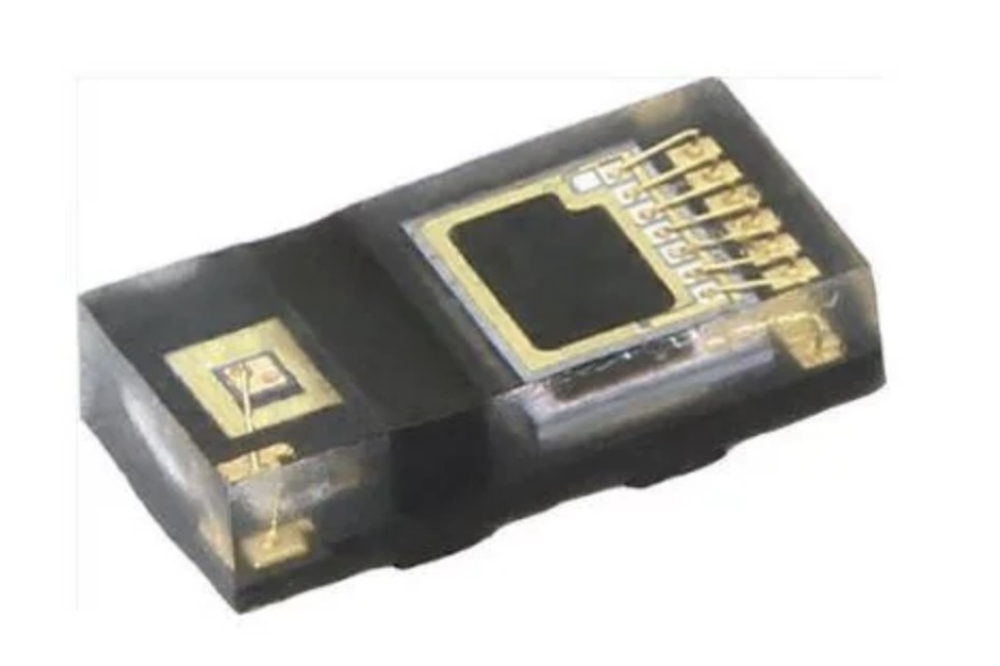

Until now, the mismatch between the crystal lattices of silicon and infrared materials has stymied progress. It causes defects that raise the dark current (and therefore the noise), reduce quantum efficiency and increase image persistence.

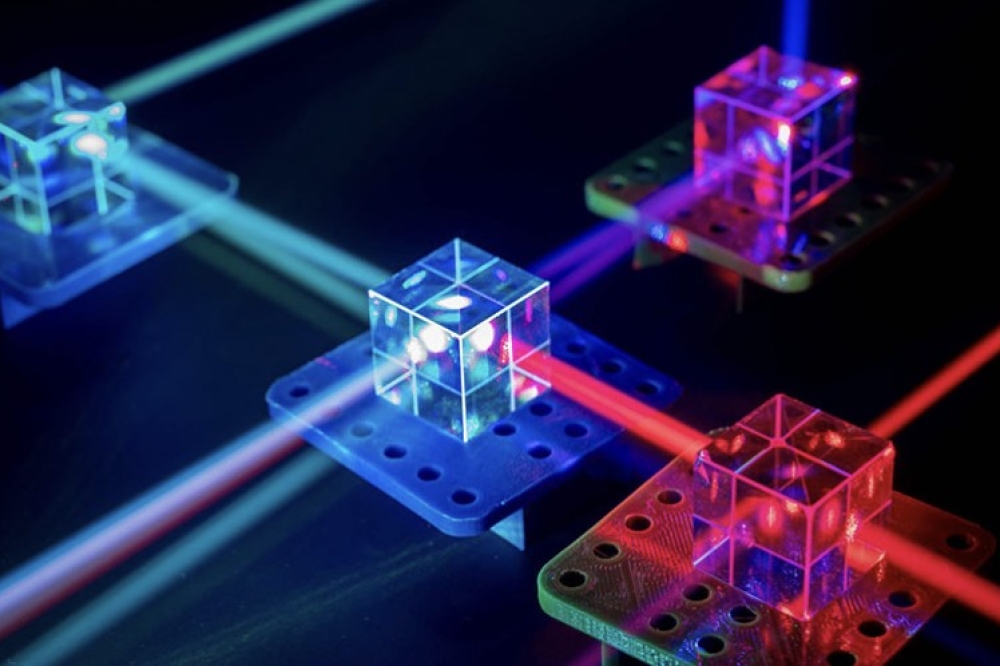

Atoms in a silicon crystal are spaced closer together than those in infrared-sensitive materials.

When the infrared material is grown on silicon, this difference in atomic spacing generates defects. Photo-generated charge that carries the signal can get stuck in these defects and be lost, or escape later and show up as a phantom signal.
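The size of this spacing difference can be put into numbers. The sketch below uses silicon's lattice constant of 5.431 angstroms and assumes about 6.46 angstroms for mercury cadmium telluride (the exact value shifts slightly with composition), then expresses the misfit as a fraction of the silicon spacing.

```python
# Lattice constants in angstroms. Silicon's is a standard value; the
# HgCdTe value is an assumed approximation that varies with composition.
A_SI = 5.431
A_HGCDTE = 6.46  # assumption: typical of infrared HgCdTe compositions


def lattice_mismatch(a_layer: float, a_substrate: float) -> float:
    """Fractional mismatch of a grown layer relative to its substrate."""
    return (a_layer - a_substrate) / a_substrate


mismatch = lattice_mismatch(A_HGCDTE, A_SI)

# Simple picture: after about 1/mismatch HgCdTe cells, the same distance
# holds one more silicon cell, so the two lattices have fallen a full
# spacing out of register and the strain must be relieved somewhere.
hgcdte_cells_per_extra_si_cell = 1 / mismatch

print(f"mismatch: {mismatch:.1%}")
print(f"about {hgcdte_cells_per_extra_si_cell:.1f} HgCdTe cells per extra silicon cell")
```

A mismatch of roughly 19 per cent means the lattices slip out of step every five or so cells, a simple way to see why defects form at the interface unless the growth process accommodates the strain.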

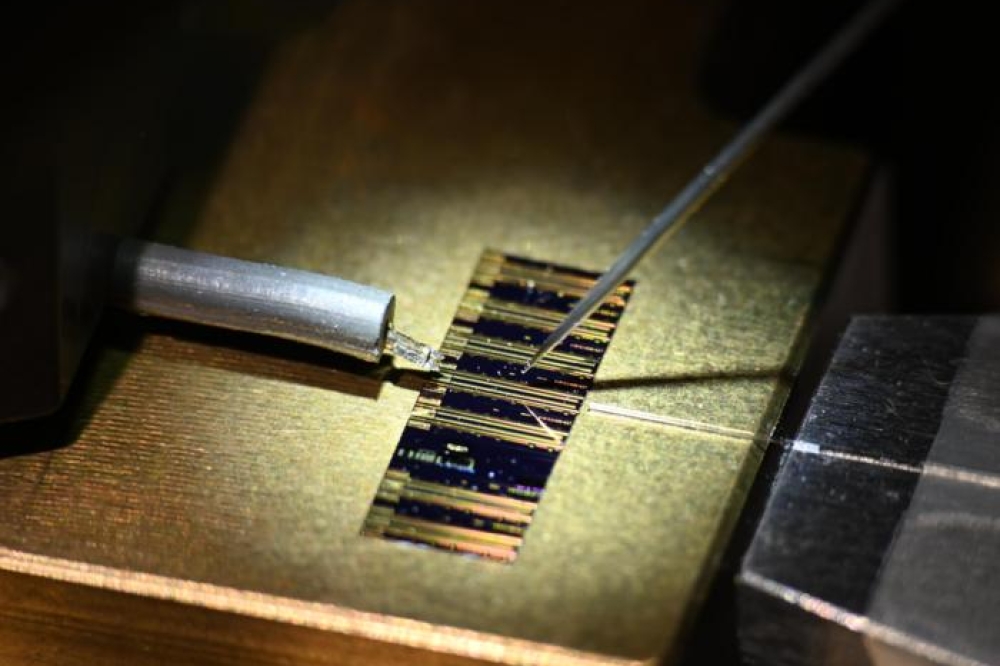

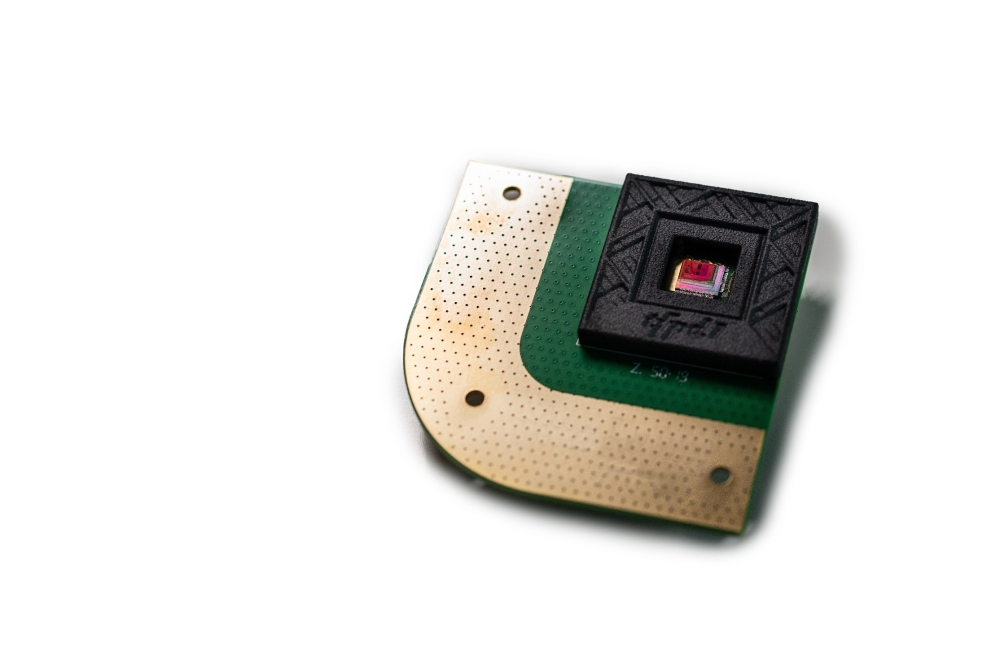

Raytheon has developed the prototype detector technology using molecular beam epitaxy to deposit light-sensitive material onto silicon substrates while maintaining high vacuum throughout the many steps in the process.

"Raytheon has come up with an innovation to combine the silicon wafer with the mercury cadmium telluride light-sensitive layer in a way that eliminates all these bad effects," Figer says. "Our proposal is to do a fabrication run of parts based on this new technology and then evaluate the technology in the laboratory and on a telescope."

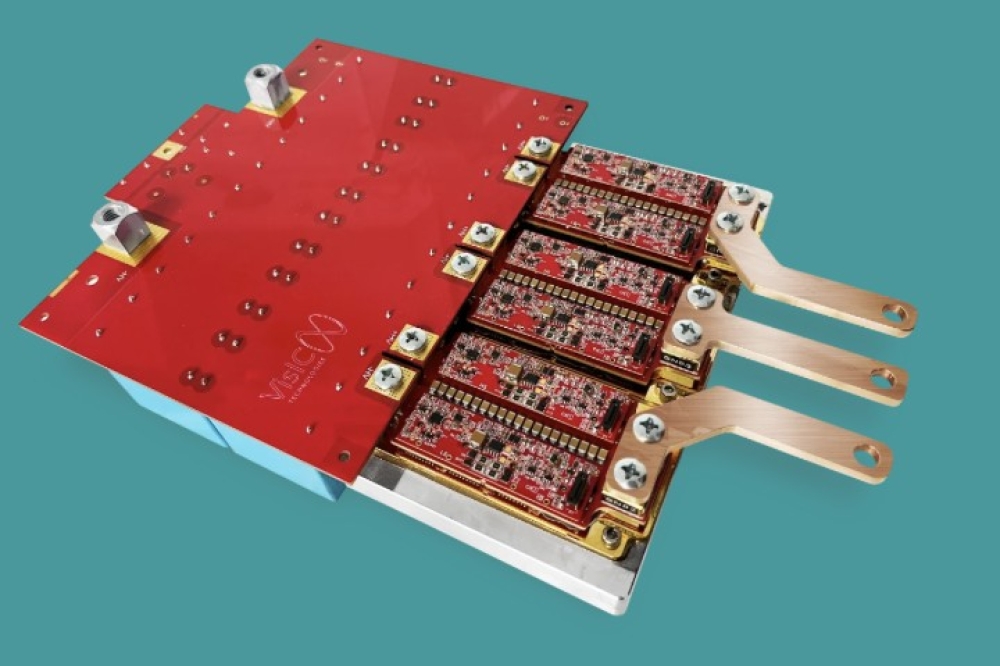

RIT and Raytheon will design and fabricate arrays of 1,024 by 1,024 pixels and 2,048 by 2,048 pixels and test them in the laboratories of the Center for Detectors.

"Not only are silicon wafers much more affordable, but they can be made in much larger sizes because the wafers are now big," Figer notes. "Instead of being a four-inch wafer, it can be 12 inches, for instance. We can make a 14,000-by-14,000-pixel detector. That has not been done. It could end up dominating the field in infrared detectors for the next 20 years."

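The wafer-size argument can be made concrete with a little geometry: the largest square array cut from a round wafer has a diagonal equal to the wafer diameter, so its side is D/sqrt(2). The sketch below assumes a pixel pitch of 15 micrometres, a hypothetical value not given in the article, and ignores edge exclusion and other practical losses, so it gives an upper bound only.

```python
import math

MM_PER_INCH = 25.4
PITCH_UM = 15.0  # hypothetical pixel pitch, not stated in the article


def max_square_side_mm(wafer_diameter_in: float) -> float:
    """Side of the largest square that fits inside a circular wafer."""
    diameter_mm = wafer_diameter_in * MM_PER_INCH
    return diameter_mm / math.sqrt(2)  # the square's diagonal equals the diameter


def max_pixels_per_side(wafer_diameter_in: float, pitch_um: float) -> int:
    """Upper bound on pixels per side for a single square array.

    Ignores edge exclusion, dicing lanes and any border needed for
    readout connections, so real arrays would be somewhat smaller.
    """
    side_um = max_square_side_mm(wafer_diameter_in) * 1000
    return math.floor(side_um / pitch_um)


for diameter in (4, 12):
    area_sq_in = math.pi * (diameter / 2) ** 2
    n = max_pixels_per_side(diameter, PITCH_UM)
    print(f"{diameter}-inch wafer: {area_sq_in:.0f} sq in, up to {n:,} x {n:,} pixels")
```

Under that assumed pitch, a 12-inch wafer has nine times the area of a 4-inch one and room for a square array a little over 14,000 pixels on a side, while a 4-inch wafer tops out below 5,000. A different pitch would scale both limits proportionally.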
Noise can obscure the signals coming from faint objects in the universe.
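To see why dark current matters for faint objects, here is a sketch of the standard single-pixel signal-to-noise estimate, SNR = S / sqrt(S + D*t + R^2), where S is the signal in electrons, D the dark current in electrons per second, t the exposure time and R the read noise. Sky background is ignored, and all the numbers are hypothetical, chosen only to show the trend.

```python
import math


def pixel_snr(signal_rate: float, dark_current: float,
              read_noise: float, exposure_s: float) -> float:
    """Signal-to-noise ratio for one pixel, ignoring sky background.

    signal_rate and dark_current are in electrons per second; read_noise
    is in electrons (rms). Shot noise on the signal and on the dark
    current add in quadrature with the read noise.
    """
    signal = signal_rate * exposure_s
    dark = dark_current * exposure_s
    return signal / math.sqrt(signal + dark + read_noise ** 2)


# Hypothetical faint source: 0.1 e-/s over a 1,000 s exposure, 10 e- read noise.
for dark_current in (0.01, 1.0):
    snr = pixel_snr(0.1, dark_current, 10.0, 1000.0)
    print(f"dark current {dark_current} e-/s -> SNR {snr:.1f}")  # 6.9, then 2.9
```

With these assumed values, raising the dark current a hundredfold cuts the signal-to-noise ratio from about 6.9 to about 2.9, enough to turn a clear detection into a marginal one.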

Figer's team will measure the detector performance using a system based on one he designed for the Space Telescope Science Institute to test detectors to be flown on the James Webb Space Telescope.

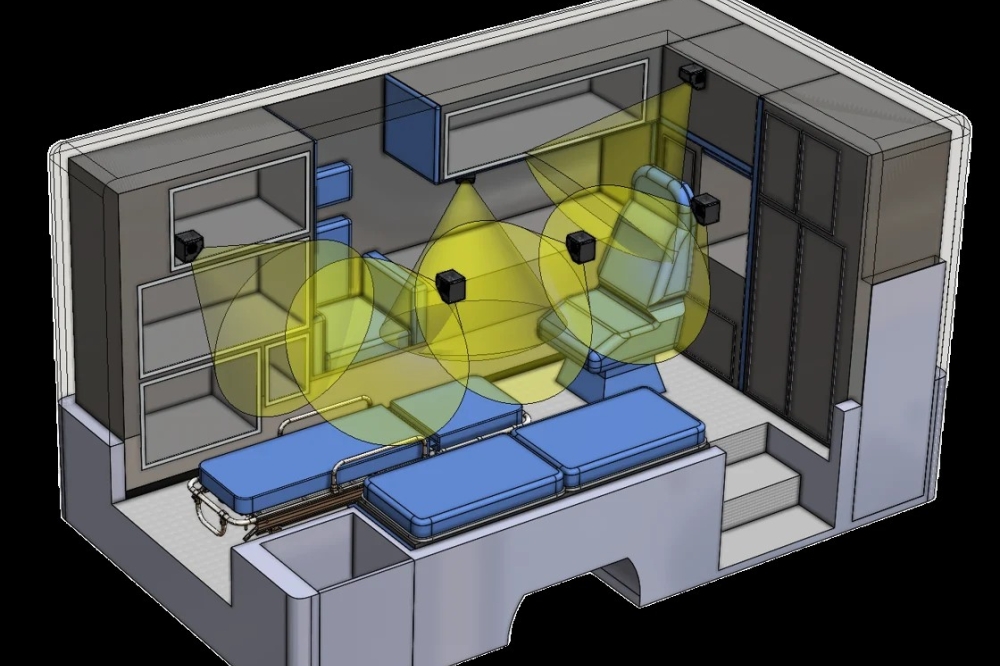

Figer will also develop a new light-tight detector housing to keep the detector optically and thermally isolated from everything around it.

The box-within-a-box design is cooled to 60 kelvin (about -350 °F) to reduce the glow, or blackbody radiation, emitted by warmer objects around the detector, which would otherwise add noise.
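Both the temperature figure and the reason for cooling can be checked with short calculations: kelvin converts to Fahrenheit as T_F = 1.8 * T_K - 459.67, and Planck's law shows how steeply blackbody glow falls with temperature at a fixed wavelength. The 2.5-micrometre wavelength and 293 K room temperature below are assumptions for illustration, not values from the article.

```python
import math

C2_UM_K = 14_387.77  # second radiation constant h*c/k, in micrometre-kelvin


def kelvin_to_fahrenheit(t_k: float) -> float:
    return t_k * 9 / 5 - 459.67


def planck_ratio(wavelength_um: float, t_warm_k: float, t_cold_k: float) -> float:
    """How many times brighter a warm blackbody is than a cold one at one wavelength.

    At a fixed wavelength, Planck's law makes spectral radiance proportional
    to 1 / (exp(c2 / (wavelength * T)) - 1), so the other factors cancel.
    """
    x_warm = C2_UM_K / (wavelength_um * t_warm_k)
    x_cold = C2_UM_K / (wavelength_um * t_cold_k)
    return math.expm1(x_cold) / math.expm1(x_warm)


print(f"60 K = {kelvin_to_fahrenheit(60):.1f} F")  # -351.7 F

# Assumed comparison: room-temperature surroundings (293 K) versus the
# 60 K housing, at an assumed wavelength of 2.5 micrometres.
ratio = planck_ratio(2.5, 293.0, 60.0)
print(f"room-temperature glow is ~{ratio:.1e} times stronger at 2.5 um")
```

At that assumed wavelength the room-temperature glow is more than 10^30 times stronger than the 60 K housing's, which is why a cold enclosure effectively removes this source of noise.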

The NSF funding to develop the technology will carry Figer and his team to the second phase of the project and the design of a much bigger device on the scale of 4,000 by 4,000 pixels. An international consortium of organisations is needed to fund the fabrication of these larger detectors.

"I am going around the world talking to directors of observatories currently in existence and future observatories and asking them if they'd like to join a consortium of organisations, each of which contributes to, and benefits from, the development of the first run of 4K parts," he says. "This is the intermediate step before having a final product."

During the third and final phase of the program, Figer foresees RIT and Raytheon building an instrument for a large telescope.

"One of the strategic goals for the Center for Detectors is to start a big astronomical instrumentation program at RIT," he concludes. "There are only a handful of programs like that in the world. It's very competitive but it's also very fulfilling to both deploy the technology and use it for science in an astronomical instrument."
