Coffee cup or travel mug?

Ams Osram has announced a dual VCSEL-based direct Time-of-Flight (dToF) infrared sensor that can tell whether an espresso cup or a travel mug is placed under a coffee machine, ensuring the right amount is dispensed every time.

This kind of precision is also critical for a broad spectrum of applications, from logistics robots that distinguish between nearly identical packages, to camera systems that maintain focus on moving objects in dynamic video scenes.

“The new dToF sensor supports precise 3D detection and differentiation in diverse applications — without a camera and with stable performance across varying targets, distances, and environmental conditions,” says David Smith, product marketing manager at Ams Osram.

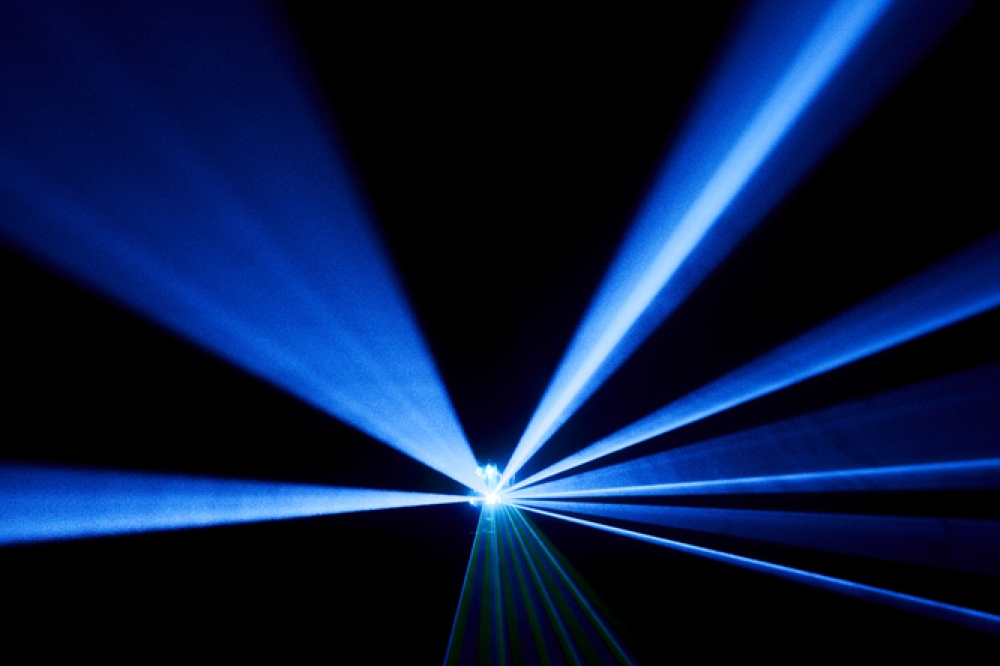

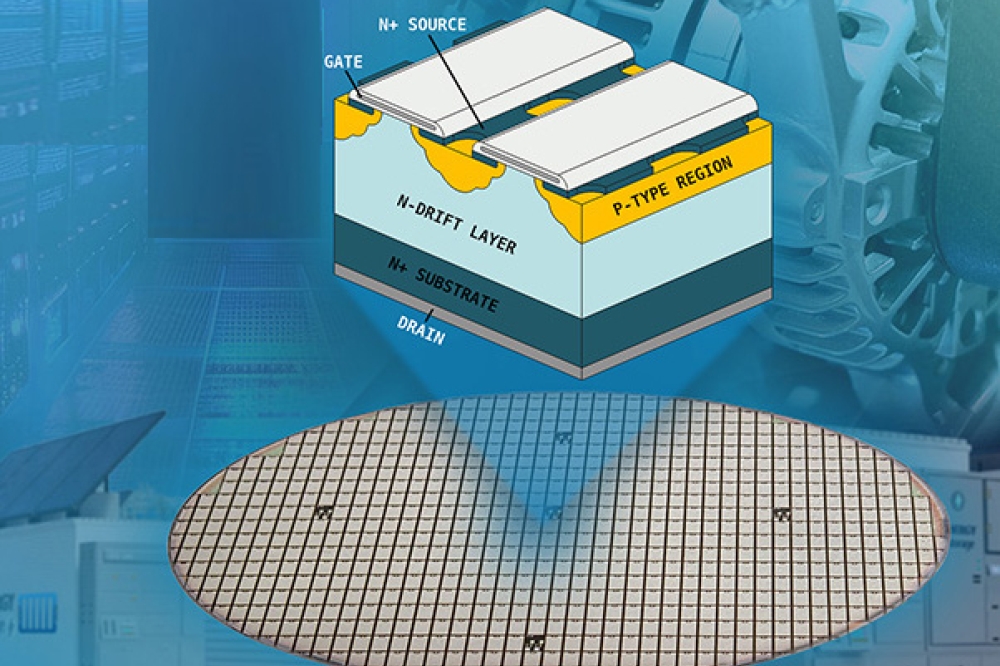

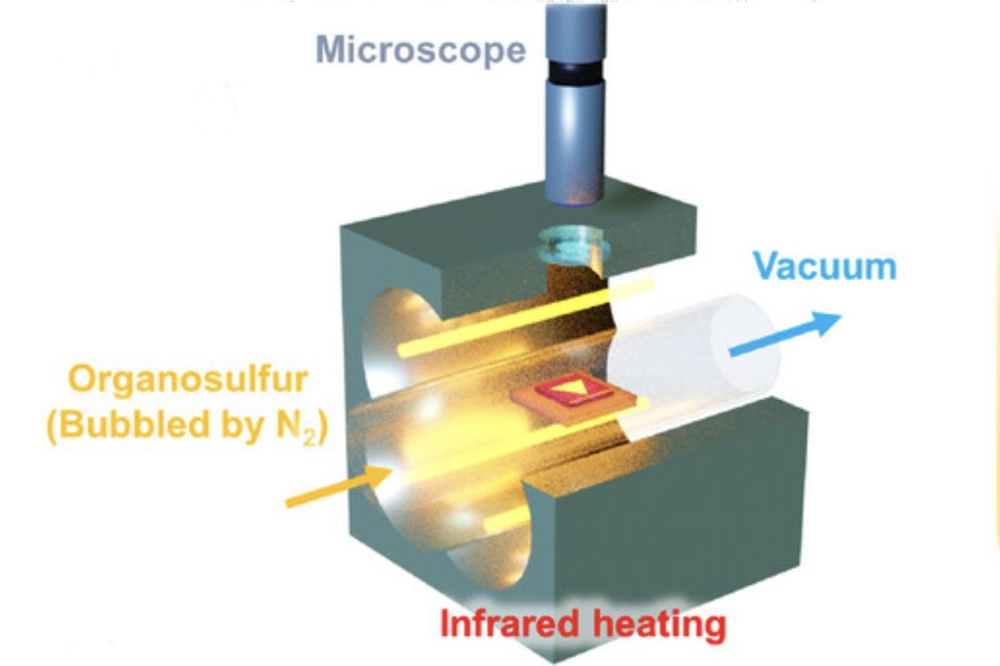

The sensor emits infrared light pulses, which reflect off objects in its field of view and return to the sensor. It then calculates the distance from the time the light takes to make the round trip. Multi-zone sensors extend this by capturing reflected light from multiple viewing angles (zones), like a network of echo points, which enables the creation of detailed 3D depth maps.
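The time-of-flight principle comes down to one line of arithmetic: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The Python sketch below is purely illustrative; the function names are invented for this example and are not part of any Ams Osram driver or API.

```python
# Illustrative sketch of the direct time-of-flight relation d = c * t / 2.
# Function names are hypothetical; this is not vendor code.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target for a measured round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the / 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long light needs to reach a target and return."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    t = round_trip_time_s(11.0)
    print(f"round trip for 11 m: {t * 1e9:.1f} ns")
    print(f"distance recovered:  {tof_distance_m(t):.3f} m")
```

For a target 11 m away the round trip takes roughly 73 ns, which shows why dToF sensors need very fine timing resolution.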

The TMF8829 divides its field of view into up to 1,536 zones, a significant improvement over the 64 zones in standard 8×8 sensors, according to the company. This higher resolution enables finer spatial detail. For example, it supports people counting and presence detection in smart lighting systems, object detection and collision avoidance in robotic applications, and intelligent occupancy monitoring in building automation. The detailed depth data also provides a foundation for machine learning models that interpret complex environments and enable intelligent interaction with surroundings.

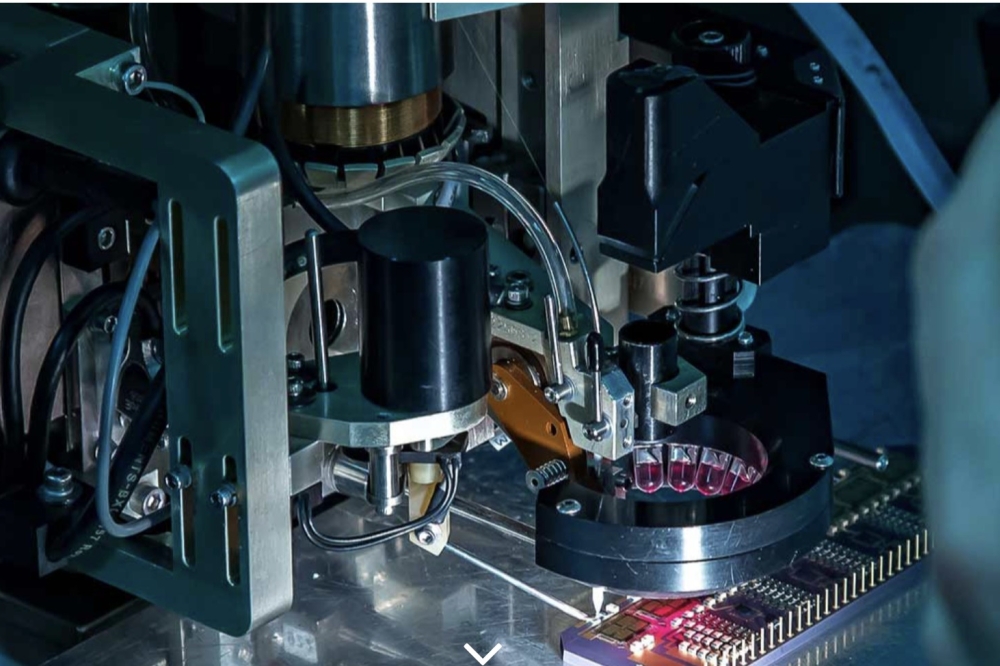

Measuring 5.7 × 2.9 × 1.5 mm, the TMF8829 operates without a camera and so supports privacy-sensitive applications. When paired with a camera, the sensor enables hybrid vision systems like RGB Depth Fusion, combining depth and colour data for AR applications such as virtual object placement.

The sensor measures distances up to 11 metres with 0.25 mm precision, which is sensitive enough to detect a finger swipe. With its 48×32 zones, the sensor covers an 80° field of view, delivering depth information across a scene comparable to that of a wide-angle lens.
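To put the zone figures in perspective, the rough Python sketch below works out the zone count and an approximate per-zone footprint on a flat target. It rests on assumptions the article does not confirm: that the 80° figure applies along the 48-zone axis, that zones split the field of view evenly, and that the target is a flat surface facing the sensor. The function names are hypothetical.

```python
# Back-of-envelope zone arithmetic. ASSUMPTIONS (not from the article):
# the 80 degree field of view spans the 48-zone axis, zones divide it
# evenly, and the target is a flat plane perpendicular to the sensor.
import math

ZONE_COLS = 48
ZONE_ROWS = 32
FOV_DEG = 80.0


def zone_count(cols: int = ZONE_COLS, rows: int = ZONE_ROWS) -> int:
    """Total number of zones in the grid."""
    return cols * rows


def zone_footprint_m(distance_m: float,
                     fov_deg: float = FOV_DEG,
                     zones_across: int = ZONE_COLS) -> float:
    """Approximate width of scene covered by one zone at a given distance."""
    # Width of the scene seen at this distance: 2 * d * tan(fov / 2).
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(fov_deg / 2.0))
    return scene_width_m / zones_across


if __name__ == "__main__":
    total = zone_count()
    print(f"zones: {total} ({total // 64}x an 8x8 sensor)")
    print(f"approx. zone width at 1 m: {zone_footprint_m(1.0) * 1000:.0f} mm")
```

Under these assumptions a single zone spans about 35 mm at a distance of 1 m; the real figure depends on the optics and on how the field of view is specified.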

On-chip processing reduces latency and simplifies integration. Instead of relying on a single signal, the sensor builds a profile of returning light pulses to identify the most accurate distance point — ensuring stable performance even with smudged cover glass. Full histogram output supports AI systems in extracting hidden patterns or additional information from the raw signal.
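As an illustration of why a histogram of returning pulses is more robust than a single reading, the Python sketch below picks the strongest peak in a histogram of photon counts and refines it with a local centroid. It is a generic textbook approach and not a description of the TMF8829's on-chip algorithm. The bin width, the `skip_bins` parameter for ignoring near-range reflections (such as those from a smudged cover glass), and the sample data are all assumptions made for the example.

```python
# Generic histogram peak picking for dToF data. This is NOT the TMF8829's
# on-chip algorithm; bin width, skip_bins and the sample data are assumed.
from typing import Sequence

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_histogram(counts: Sequence[int],
                            bin_width_s: float,
                            skip_bins: int = 0,
                            window: int = 1) -> float:
    """Estimate target distance from photon counts per time bin."""
    if not counts or skip_bins >= len(counts):
        raise ValueError("no usable bins in histogram")
    # Tallest bin outside the skipped near-range region.
    peak = max(range(skip_bins, len(counts)), key=lambda i: counts[i])
    lo = max(skip_bins, peak - window)
    hi = min(len(counts) - 1, peak + window)
    total = sum(counts[lo:hi + 1])
    if total == 0:
        raise ValueError("no signal in histogram")
    # Count-weighted centroid around the peak gives sub-bin resolution.
    centroid = sum(i * counts[i] for i in range(lo, hi + 1)) / total
    # Assume bin i covers [i, i + 1) * bin_width_s, so add 0.5 for its centre.
    round_trip_s = (centroid + 0.5) * bin_width_s
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Made-up histogram: strong near-range return in bin 0, target near bin 5.
    sample = [50, 5, 1, 2, 10, 40, 10, 2]
    naive = distance_from_histogram(sample, bin_width_s=1e-9)
    robust = distance_from_histogram(sample, bin_width_s=1e-9, skip_bins=2)
    print(f"without skipping near bins: {naive:.3f} m")
    print(f"skipping near bins:         {robust:.3f} m")
```

In this made-up histogram the tallest bin is the near-range return, so a naive peak search reports a target a few centimetres away, while skipping the first bins recovers the real target at about 0.82 m.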